Why Microsoft Copilot Rollouts Stall at 20% Adoption: The Pattern Nobody Talks About

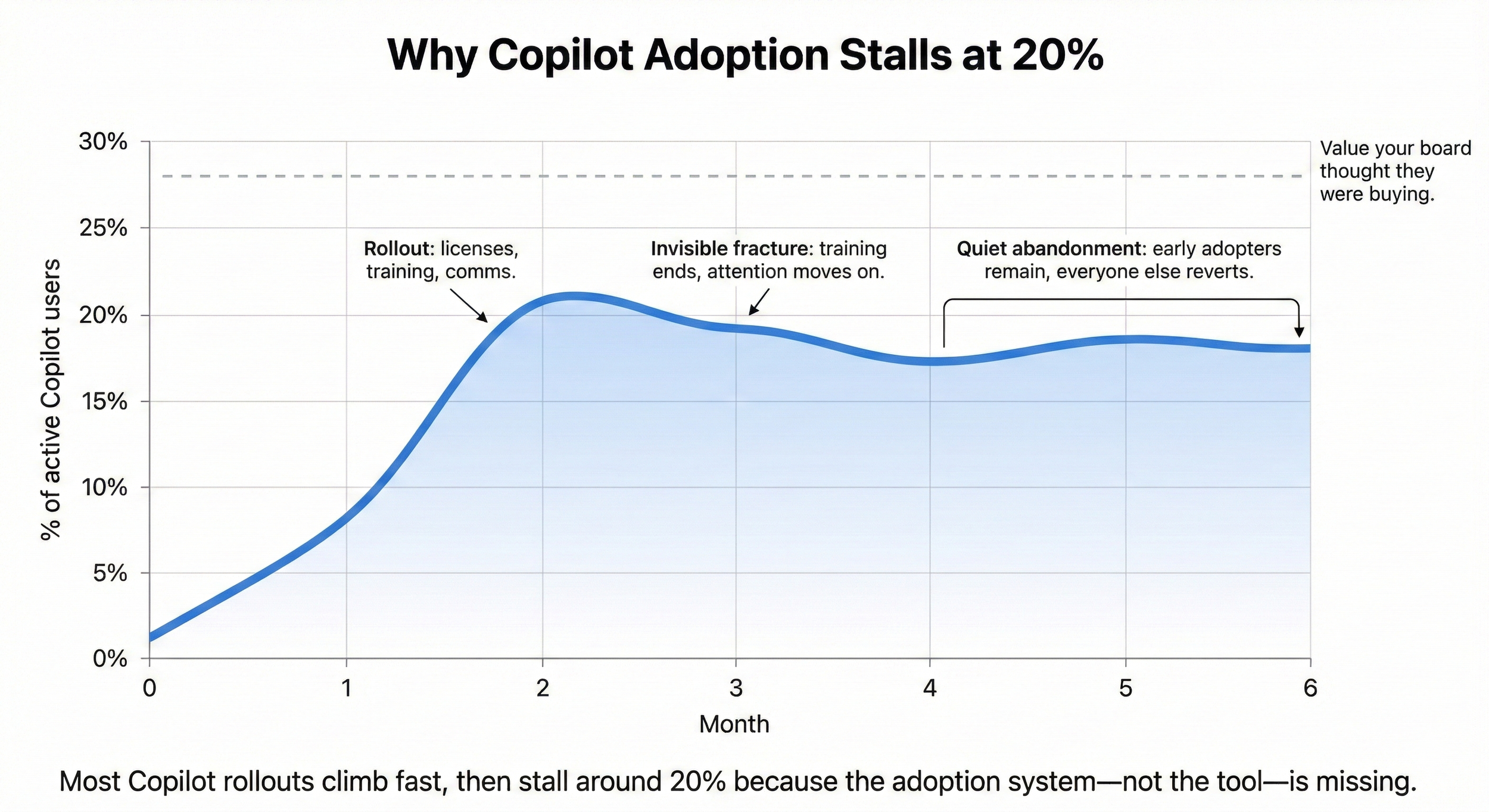

The pattern shows up consistently across Copilot rollouts: UK government pilots, enterprise case studies, Microsoft's own research. Initial enthusiasm. Usage climbs for 6-8 weeks. Then it stalls, typically between 15% and 25% adoption, often settling around 20%.

I've led digital transformation programs from inside mid-market organizations. I know what this looks like when you're the one responsible for making adoption work, when the consultants have left, the training's been delivered, and usage quietly flatlines. What's invisible in the case studies and pilot reports is why it happens. That's what I now diagnose: the organizational design gaps that training programs miss and deployment teams don't see until it's too late.

What's remarkable isn't that this happens; it's that it happens so predictably, and that almost nobody sees it coming. Not the technology team who planned the rollout. Not the L&D team who designed the training. Not the executives who approved the budget.

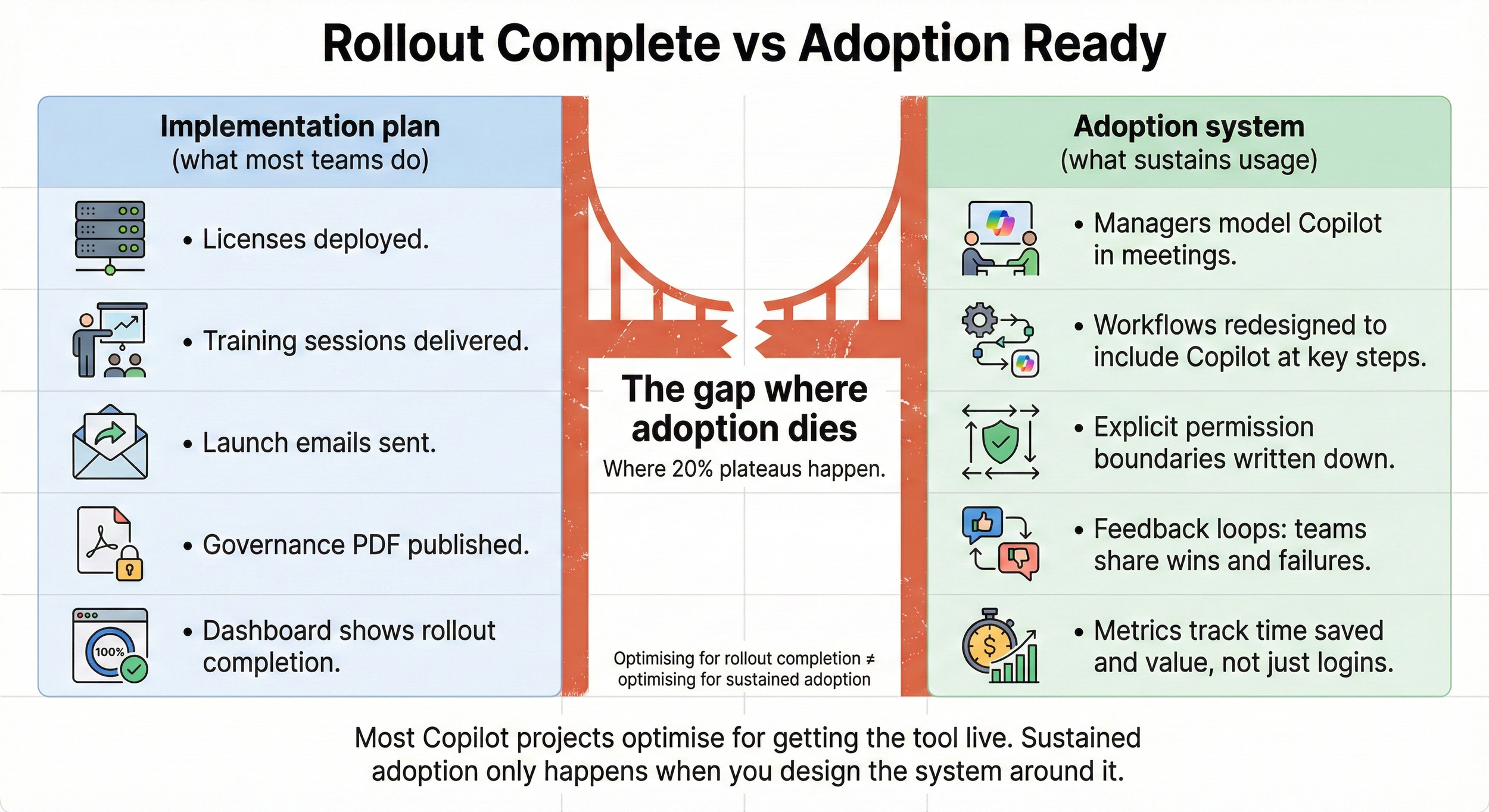

The problem isn't the product. Microsoft Copilot is technically sound. The problem is that organizations plan the tool rollout without redesigning the adoption system around it. And that invisible gap, the one between 'we rolled out the tool' and 'people sustainably use the tool', is where your adoption dies.

The Real Cost Nobody Calculates

You're paying £25-35 per user per month for licenses that sit unused. For a 1,000-person firm at 20% adoption, that's 800 idle licenses: £240,000 a year on unused software at the bottom of that price range, and £336,000 at the top.

Microsoft's own research suggests Copilot users save 10-12 hours per month. With 80% of those 1,000 people not using it, you're leaving 8,000-9,600 hours of productivity on the table every month. That's not a rounding error; it's real capacity you paid for but can't access.
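The arithmetic behind these figures is simple, but it's worth making explicit because each headline number depends on where in the quoted range you sit. A minimal Python sketch, assuming every non-adopter still holds a paid license and that the per-user savings apply uniformly (both simplifications; the function names are illustrative):

```python
# Illustrative ranges quoted in the text.
PRICE_RANGE_GBP = (25, 35)    # license cost per user per month
HOURS_SAVED_RANGE = (10, 12)  # hours saved per active user per month


def idle_users(headcount: int, adoption_rate: float) -> int:
    """Licensed users who are not actively using the tool."""
    return round(headcount * (1 - adoption_rate))


def annual_license_waste(headcount: int, adoption_rate: float, price_per_month: float) -> float:
    """Yearly spend on licenses held by non-users."""
    return idle_users(headcount, adoption_rate) * price_per_month * 12


def monthly_hours_forgone(headcount: int, adoption_rate: float, hours_per_month: float) -> float:
    """Monthly hours the non-users would have saved had they adopted."""
    return idle_users(headcount, adoption_rate) * hours_per_month


if __name__ == "__main__":
    for price in PRICE_RANGE_GBP:
        print(f"At £{price}/user/month: £{annual_license_waste(1_000, 0.20, price):,} a year")
    for hours in HOURS_SAVED_RANGE:
        print(f"At {hours} h/user/month: {monthly_hours_forgone(1_000, 0.20, hours):,} hours a month")
```

At £25 the 800 idle seats cost £240,000 a year and at £35 they cost £336,000; the forgone time runs from 8,000 to 9,600 hours a month.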

And then there's the political cost. You told the board this would transform productivity. Six months later, nobody's using it. Now every future technology decision gets the question: "Will this be like Copilot?"

What makes it harder: you can't just blame the tool. It works—your 20% prove that. You can't blame the people—they went to the training, they tried it, they had good reasons for stopping. You can't even blame the implementation team—they followed the playbook Microsoft provided.

The problem is the playbook itself. It optimizes for rollout completion, not sustained adoption. Those are different outcomes, and almost nobody designs for the second one.

What Happens After Rollout

The standard Copilot rollout looks like this:

Months 1-2: Initial deployment

IT sets up licenses and permissions. L&D delivers training sessions. Communications team sends announcement emails. Early adopters experiment. Usage metrics look promising.

Month 3: The invisible fracture

Training ends. Executive attention moves elsewhere. Users are "on their own." No feedback loop exists to tell them if they're using it well. No workflow redesign to make Copilot easier than the old way. Managers assume "it's working" because they received completion metrics, not usage metrics.

Months 4-6: The quiet abandonment

Users revert to familiar tools. Nobody notices because there's no adoption measurement system. The 20% who stick with it become isolated—seen as "the tech people." Leadership discovers the problem only when someone pulls usage data for a board report.
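A plateau like this is easy to spot if someone owns the check. A minimal Python sketch, assuming you can export weekly active-user counts from your admin reporting; the data below is invented, and the 4-week window and 1-percentage-point threshold are arbitrary illustrative choices, not an industry standard:

```python
# Hypothetical weekly active-user counts for 1,000 licensed users (invented data).
WEEKLY_ACTIVE = [40, 90, 140, 180, 200, 210, 205, 200, 200, 198, 201, 199]
LICENSED_USERS = 1_000


def find_plateau(weekly_active, licensed, window=4, min_gain_pts=1.0):
    """Return the first (zero-based) week in which adoption, as a percentage of
    licensed users, rose by less than `min_gain_pts` percentage points over the
    previous `window` weeks. Return None if adoption is still climbing."""
    rates = [100 * active / licensed for active in weekly_active]
    for week in range(window, len(rates)):
        if rates[week] - rates[week - window] < min_gain_pts:
            return week
    return None


if __name__ == "__main__":
    week = find_plateau(WEEKLY_ACTIVE, LICENSED_USERS)
    if week is not None:
        rate = 100 * WEEKLY_ACTIVE[week] / LICENSED_USERS
        print(f"Plateau detected at week {week}: {rate:.1f}% of licensed users active")
```

With this invented series the check fires at week 8 (about two months in) at 20% adoption. The exact threshold matters less than the fact that the alert arrives before the board report does.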

The pattern I see in organizations is that they design an implementation plan but not an adoption system.

An implementation plan answers: How do we roll this out?

An adoption system answers: How do we make sustained usage the path of least resistance?

They're not the same thing.

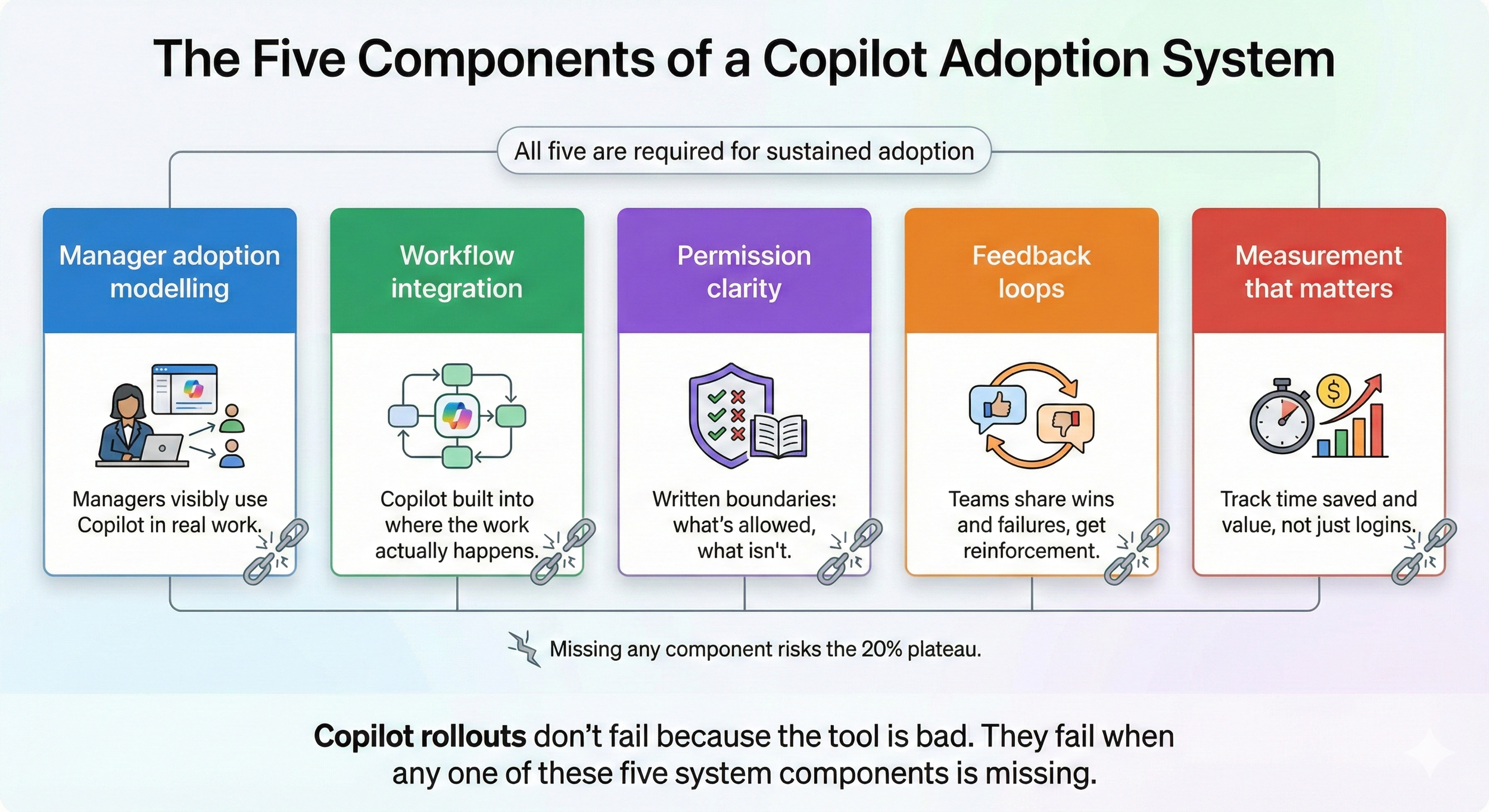

The Five Missing System Components

When I audit stalled Copilot rollouts, I'm looking for five things that should exist but almost never do. These aren't theoretical—I've led transformation programs where the absence of these components killed adoption, and I've seen what happens when they're built properly.

1. Manager adoption modeling

Employees don't adopt tools their managers don't visibly use. But nobody told managers that modeling usage was part of their job. They approved the rollout; they assumed that was enough. It wasn't.

This happens all the time in transformation programs. Managers will be positive initially—they'll attend a launch event, encourage their teams to go to training. But they don't show up to the training themselves. Lack of time is the usual reason given.

What I've observed repeatedly: the managers who don't show up are usually leading the least engaged teams in the organization. It's not a coincidence. Their absence sends a signal louder than any encouragement email.

When managers model usage—actually use the tool visibly in team meetings, share what they learned, show their failures—adoption follows. When they don't, their teams quietly opt out. No amount of training compensates for a manager who signals "this isn't important enough for my time."

2. Workflow integration

Copilot sits inside Microsoft 365 tools. But if your team's daily workflow doesn't naturally open those tools, Copilot never gets a chance. You've added a capability without redesigning the workflow to incorporate it.

I've seen this play out badly and well. The bad version: tools and processes designed purely for HR convenience with little insight into how the organization actually works. Performance management platforms are notorious for this—they get dumped on the organization, and nobody uses them without being berated by their HRBP.

The good version? I've seen it work at multi-site organizations, but it takes tremendous effort and research. You need a team of people working on workflow integration for 6-12 months for something big like a new HRIS. Not just rolling out the tool—actually redesigning how work happens to incorporate it.

For Copilot, this means mapping where your teams actually work. If they live in Salesforce, you need Copilot integrated there. If they live in project management tools, that's where it needs to be accessible. Don't make them leave their workflow to access productivity.

3. Permission clarity

You told people "use Copilot responsibly." They heard "don't make a mistake." In the absence of clear parameters, cautious employees opt out. They're not resisting; they're protecting themselves.

I've watched this pattern destroy adoption repeatedly. Leadership says "everyone is welcome to attend training" or "everyone should use this tool." Then a Head of Department undermines that message—"we need shift coverage" or "we're stretched"—but really they're protecting their budget or their team's time.

Employees hear mixed messages and default to the safest interpretation: don't use it. Especially in regulated environments or client-facing work, ambiguity kills experimentation.

What actually works: explicit boundaries written down. Not "use it responsibly" but "You can use Copilot for internal documents, drafting emails, research summaries. You cannot use it for client contracts until legal confirms." Clarity unlocks experimentation. Ambiguity kills it.
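Writing boundaries down can be as simple as a shared lookup of allowed, pending, and unlisted uses. A minimal Python sketch: the task categories mirror the example above, and the rule that anything unlisted routes to a named person is an assumption about good practice, not a Copilot feature:

```python
from enum import Enum


class Permission(Enum):
    ALLOWED = "allowed"
    NOT_YET = "not yet: awaiting legal sign-off"
    ASK = "not listed: ask your manager"


# Hypothetical policy table; task names follow the example in the text.
COPILOT_POLICY = {
    "internal documents": Permission.ALLOWED,
    "drafting emails": Permission.ALLOWED,
    "research summaries": Permission.ALLOWED,
    "client contracts": Permission.NOT_YET,
}


def check(task: str) -> Permission:
    """Look up a task. Unlisted tasks get an explicit route to an answer
    instead of silence, which is where cautious employees opt out."""
    return COPILOT_POLICY.get(task.strip().lower(), Permission.ASK)


if __name__ == "__main__":
    for task in ("Drafting emails", "client contracts", "board papers"):
        print(f"{task}: {check(task).value}")
```

The default branch is the important design choice: ambiguity is replaced by a known next step.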

4. Feedback loops

Nobody tells users if they're doing it well. So they assume they're not. Early experiments feel clumsy. Without positive reinforcement, they stop experimenting.

In one digital literacy campaign I led at a 7,000-person organization, we surveyed people about how they preferred to receive learning resources, which we had typically delivered as classroom-style training. 30% said Slack. We opened a Slack channel. In the first month, 1,000 users signed up.

Why? Because it created a feedback loop. People could see what their peers were doing, ask questions, share wins, and learn in real-time. It normalized experimentation. Someone would post "Used Copilot to draft meeting notes, saved 30 minutes" and three other people would try it that afternoon.

Without feedback loops, people experiment once, don't know if they did it "right," and stop. With feedback loops—whether it's a Slack channel, office hours, or weekly team check-ins—learning accelerates and adoption becomes self-sustaining.

5. Measurement that matters

You're tracking "usage"—did they open it? You're not tracking value delivery—did it save them time, improve output, reduce friction? So you don't know what's working.

This happens all the time in transformation programs. People want easy wins. A 36% engagement rate gets declared a success. Complaints get sidelined or excused—the person raising them is labeled pessimistic or difficult.

I've watched organizations celebrate "45% adoption" when the actual pattern was: 45% of people opened the tool once, tried something, decided it wasn't worth it, and never came back. That's not adoption—that's failed experimentation being rebranded as success.

The measurement shift that matters: stop counting logins. Start asking "Did this save you time? Improve your work? Reduce friction?" Track outcomes, not activity. Otherwise, you're measuring the wrong thing and missing the actual adoption failure.
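The gap between the two measures is easy to show on the same data. A minimal Python sketch over an invented usage log, assuming each record holds a user ID, a week number, and a user-reported "did this save time?" flag; the field names and the "value reported in at least three distinct weeks" rule are illustrative assumptions:

```python
from dataclasses import dataclass


@dataclass
class UsageEvent:
    user: str
    week: int
    saved_time: bool  # user-reported: did this task save time?


def login_adoption(events, licensed):
    """Share of licensed users who opened the tool at least once."""
    return len({e.user for e in events}) / licensed


def value_adoption(events, licensed, min_weeks=3):
    """Share of licensed users who reported time saved in at least `min_weeks`
    distinct weeks, i.e. who kept getting value rather than trying it once."""
    weeks_with_value = {}
    for e in events:
        if e.saved_time:
            weeks_with_value.setdefault(e.user, set()).add(e.week)
    sustained = [u for u, weeks in weeks_with_value.items() if len(weeks) >= min_weeks]
    return len(sustained) / licensed


# Invented log for 20 licensed users: eight try it once and leave,
# one uses it every week and reports time saved.
EVENTS = [UsageEvent(f"user{i}", 1, False) for i in range(8)]
EVENTS += [UsageEvent("regular", week, True) for week in range(1, 5)]

if __name__ == "__main__":
    print(f"Login-based adoption: {login_adoption(EVENTS, 20):.0%}")
    print(f"Value-based adoption: {value_adoption(EVENTS, 20):.0%}")
```

On this log the login measure reports 45% "adoption" while the value measure reports 5%: the same failed-experimentation pattern described above.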

Why Your Leadership Team Missed This

Your leadership team optimized for the wrong success metric.

They measured: Rollout completion

✓ Licenses deployed

✓ Training delivered

✓ Communications sent

✓ Governance framework published

What they should have measured: Adoption system readiness

✗ Do managers understand their role in modeling usage?

✗ Have workflows been redesigned to incorporate Copilot?

✗ Can employees articulate clear permission boundaries?

✗ Does a feedback loop exist to reinforce good usage?

✗ Do we measure value delivery, not just tool access?

Nobody is incompetent here. This happens because adoption systems are invisible: you only notice them when they're missing.

Three assumptions that kill adoption

Assumption 1: "Training equals adoption"

Training teaches how to use the tool. It doesn't create the conditions where using it is easier than not using it. Those are different problems.

I've seen firms deliver exceptional training—clear, practical, well-attended. Adoption still stalled. Why? Because the training answered "how does this work?" but didn't solve "why is reverting to the old way still easier?"

Assumption 2: "If people need it, they'll use it"

Only if using it is frictionless. If reverting to the old way is easier—even slightly—they revert. Humans optimize for cognitive ease, not theoretical productivity.

This isn't laziness. It's how brains work under time pressure. When someone has 47 things on their task list, they default to the method that requires the least mental overhead. If Copilot adds friction (even one extra click, one moment of uncertainty about permissions, one unanswered question about whether they're doing it right), they revert.

Assumption 3: "Technology problems have technology solutions"

This isn't a technology problem. Copilot works. This is an organizational design problem. You've inserted a new capability into an unchanged system. The system rejected it.

I keep telling clients: you don't have an AI problem. You have an adoption system problem that's surfacing through AI. The same gaps would kill any tool rollout. Copilot just made them visible.

Why this is hard to see from inside

You're too close to it. When you're inside the organization:

You know the strategy (employees don't)

You know whether the training was optional or mandatory (each creates a different adoption psychology)

You know what leadership meant by 'use responsibly' (employees interpreted it differently)

You know the governance is actually permissive (employees experienced it as restrictive)

You know adoption is strategically important (employees think it's just another tool)

The gap between leadership's mental model and employees' lived experience is where adoption dies.

You need an external diagnostic perspective—not because you're not smart enough to figure it out, but because you can't see your own organizational blind spots. It's the same reason therapists have therapists and editors need editors. Proximity creates distortion.

What Needs to Shift

Based on leading transformation programs and analyzing stalled rollouts, this is what actually needs to happen. Not a checklist—a system redesign.

Reframe manager responsibility

Managers need to understand: adoption is now part of their job. Not "encourage your team to try it." Actively model it. Use Copilot visibly in team meetings. Share what you learned. Show failures. This isn't optional; it's leadership.

The shift: from "I approved this" to "I demonstrate this."

Redesign workflows, not just add capabilities

Map where your teams actually work. If they live in Salesforce, bring Copilot into Salesforce workflows. If they live in Slack, integrate there. Don't make them leave their workflow to access productivity.

I had one firm redesign their morning stand-up structure to include a 60-second "what did you use Copilot for yesterday?" rotation. Not to police usage—to normalize it and accelerate collective learning. Usage jumped 34% in six weeks. Not because people learned new features. Because they learned what their peers were doing and thought: "I could try that."

Articulate explicit permission boundaries

Write down exactly what's allowed. Not "use it responsibly"—that's meaningless. Say: "You can use Copilot for internal documents, drafting emails, research summaries. You cannot use it for client contracts until legal confirms." Clarity unlocks experimentation.

This sounds bureaucratic. It's actually the opposite. Ambiguity kills experimentation. Boundaries create safety.

Build feedback loops

Create spaces where people share what worked. Weekly team check-ins: "Who used Copilot this week? What did you learn?" Not to police usage, but to normalize experimentation and accelerate learning. When people can see what their peers are doing and learn from both successes and failures, adoption accelerates organically.

Measure value, not activity

Stop counting "number of times Copilot was opened." Start asking: "Did this save you time? Improve your output? Reduce friction?" Track outcomes, not activity.

I worked with one firm that switched from measuring "daily active users" to measuring "tasks where Copilot demonstrably saved time." The numbers dropped initially (fewer people qualified under the new definition), but the quality of adoption skyrocketed. They stopped celebrating vanity metrics and started celebrating actual value delivery.

What This Isn't

This is not:

Retraining everyone. Training wasn't the problem. I've seen firms do three rounds of training. Usage still stalled. More training doesn't fix a system design gap.

Buying better technology. Copilot works. The product is sound. You don't need a different tool—you need a different adoption approach.

Waiting for people to 'get on board.' They're not resistant. They're navigating an unclear system. Resistance and confusion look similar from a distance but require completely different responses.

Change management grandstanding. Posters and town halls don't fix system design. I've seen firms spend tens of thousands on internal communications campaigns. Adoption moved 3%. Why? Because the underlying system blockers remained untouched.

This is system redesign. It requires diagnosis of what's actually broken, not assumptions about what 'should' work.

The Trade-Off Nobody Tells You

Fixing this takes executive attention and manager time. You'll need:

2-6 months of sustained focus

Manager enablement (not training—enablement in how to model, measure, and support adoption)

Workflow redesign work

Measurement system changes

That's real investment. Not as much as the initial rollout cost, but not trivial either.

The alternative is continuing to pay for unused licenses and explaining to the board why your transformation initiative failed.

Most organizations choose not to fix it. They declare victory at 20% and move on. That's a choice, but it's an expensive one.

I'm not here to tell you which choice is right. I'm here to tell you those are the actual choices, not the sanitized version where "a bit more effort" magically fixes it.

The Four Ways Organizations Try to Fix This (And Why They Fail)

I've watched firms attempt the same four responses. None of them work.

"More training will fix it"

They retrain everyone. Same content, different presenter. Usage stays flat. Why? Training wasn't the blocker. System design was.

I sat through one of these retraining sessions. The trainer was excellent. The content was clear. People paid attention, took notes, asked good questions. Three weeks later, usage was identical to pre-training levels.

When I interviewed participants afterwards, every single one said: "The training was interesting. But my actual work hasn't changed, my manager still doesn't use it, and I still don't know if I'm allowed to use it for work."

Training can't fix system problems.

"Mandate usage and measure compliance"

Leadership declares "everyone must use Copilot for X task." Compliance goes up. Value delivery stays low. Employees do the minimum required, then revert.

I watched one firm mandate that all meeting notes be written using Copilot. Compliance hit 78% within a week. But the notes were worse—generic, missing context, clearly copy-pasted from AI output without thought. People were gaming the metric, not adopting the tool.

Mandates produce compliance, not capability.

"Identify champions to evangelize"

They appoint "Copilot champions" to spread enthusiasm. Champions get excited. Nobody else cares. The gap between champions and everyone else widens.

I've seen this create resentment. Champions become "those people who won't shut up about Copilot." Normal employees tune them out. Worse, it signals to managers that adoption is someone else's job: the champions will handle it.

Champions are useful for answering questions. They're terrible at fixing system design problems.

"Wait for the next version to fix it"

They assume the next Microsoft update will solve it. It won't. The product isn't the problem.

I had one client postpone their adoption recovery work for four months waiting for a Copilot feature update. The update shipped. Adoption stayed flat. Why? Because the feature added capability, not clarity about workflows, permissions, or manager modeling.

Waiting for a product fix when you have a system problem is just expensive procrastination.

Why These All Fail

They treat adoption as a communication or motivation problem. It's not. It's a system design problem.

Your employees aren't resistant. They went to training. They tried it. They had good reasons for stopping:

Their manager doesn't use it (social proof missing)

Their workflow makes it harder to use than the old way (friction too high)

They don't know what's allowed (permission ambiguity)

Nobody confirmed they're doing it right (feedback loop missing)

They can't tell if it's saving time or creating work (measurement gap)

Those aren't motivation problems. Those are design problems.

And design problems require diagnosis, not enthusiasm.

When 20% Adoption Might Be Fine

Some will argue 20% adoption is actually success—that you only need power users, not universal adoption. They're not entirely wrong.

If your strategy is:

Concentrate Copilot in specific roles where ROI is highest (analysts, content creators)

Accept that other roles won't benefit meaningfully

Optimize for depth of usage, not breadth

Then 20% might be the right answer.

But that's rarely the strategy organizations articulated when they bought licenses for everyone. Most firms rolled out broadly, expecting broad adoption. When adoption stalls at 20%, it's not because they decided that was optimal—it's because the system broke and they don't know how to fix it.

The honest diagnostic question is: Did you design for 20%, or did you get stuck at 20%?

If you designed it, you're done.

If you got stuck, you've got a system problem to diagnose.

What to Do If You're Seeing This Pattern

If you're reading this and recognizing your own rollout, you're not alone. This pattern is predictable, not exceptional.

Your training wasn't bad. Your people aren't resistant. Your technology choice wasn't wrong.

What's missing is diagnosis.

You need to understand:

Which of the five system components is actually broken in your context

What your managers think their role is (versus what it needs to be)

Where your workflows create friction (versus where Copilot integrates naturally)

What permission ambiguity exists (that you can't see from inside)

What your current metrics hide (versus what they should reveal)

That's what a forensic adoption audit does. It diagnoses what's actually broken—not what 'should' be broken according to a playbook, but what's broken in your specific organizational context.

No obligation. Just diagnostic clarity.

Paul Thomas is a behavioral turnaround specialist focused on failed AI adoption in UK mid-market firms. After leading digital transformation programs as an HR leader, and watching technically sound rollouts stall for organizational reasons, he now diagnoses why GenAI implementations fail. His forensic approach examines governance gaps, workflow friction, and managerial adoption patterns that external consultants and training programs miss. He works with HR Directors, Finance leaders, and Transformation leads who need diagnostic clarity before committing to recovery. He also writes and publishes The Human Stack, a weekly newsletter with more than 5,000 readers on how to lead well in the era of GenAI.

AI Adoption: Using the ADKAR Framework to Make Change Stick

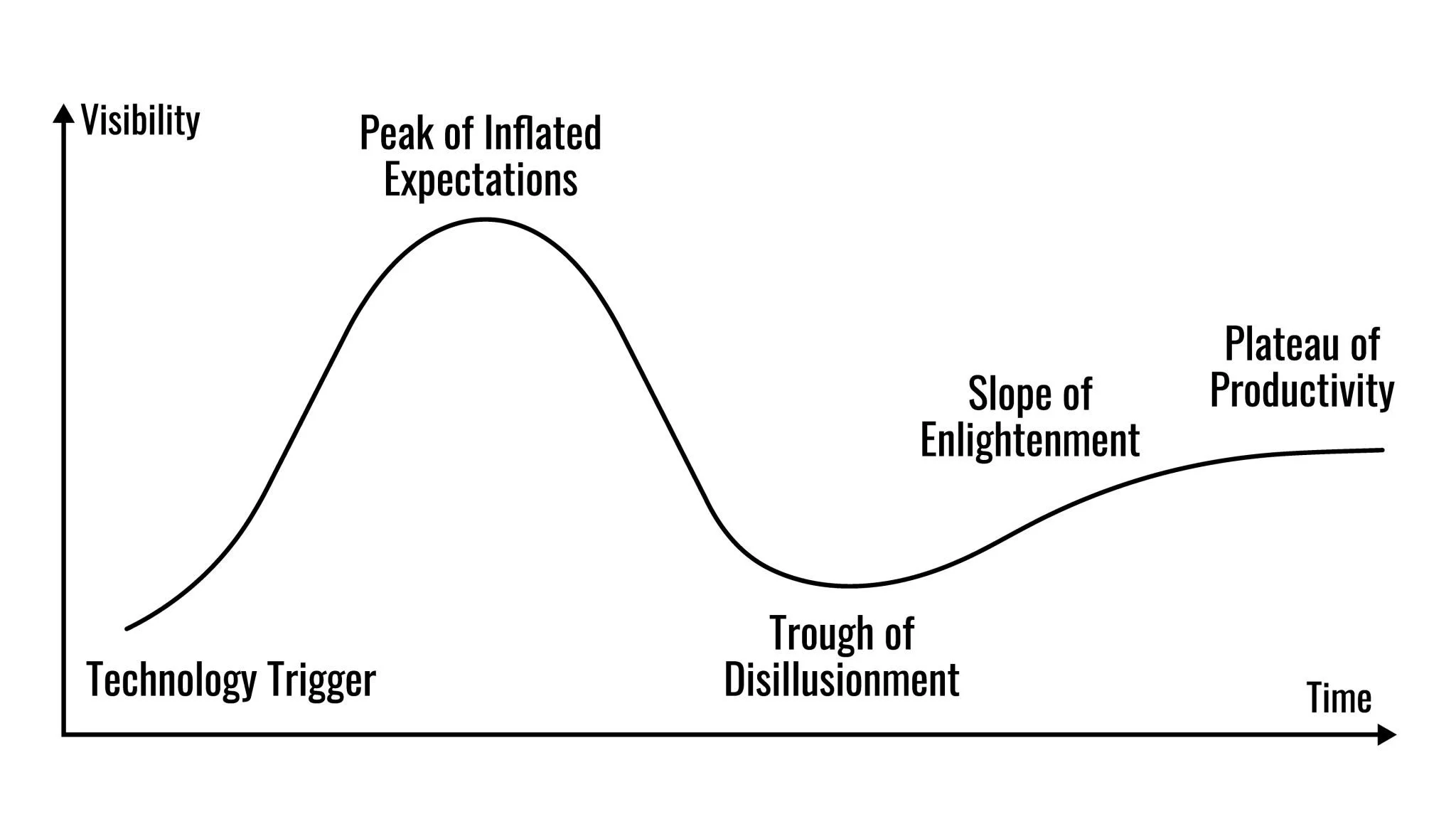

Everyone's talking about AI tools. Leadership teams are excited about productivity gains. Vendors are promising transformation. And yet, here's what the data actually shows: around 90% of AI implementations fail to deliver their expected value.

That's not a technology problem. It's a people problem.

I've spent months working with mid-market UK firms on AI adoption, and the pattern is consistent. The organisations that succeed aren't the ones with the biggest budgets or the flashiest tools. They're the ones that treat AI adoption as a change management challenge, not a tech rollout.

Enter the ADKAR framework. It's not new, it's not sexy, and it won't get you excited at a conference. But it works. And if you're serious about making AI stick in your organisation, it's worth understanding why.

The Real Reason AI Rollouts Fail

Let's be honest about what's happening in most UK organisations right now.

Leadership sees the potential. They read the headlines, attend the webinars, and get genuinely excited about what AI could do for efficiency, decision-making, and competitive advantage. They greenlight a Copilot rollout or invest in a shiny new platform.

Meanwhile, employees are worried. They're reading different headlines: ones about job losses, skills gaps, and machines replacing humans. A recent analysis shows that while executives see opportunity, frontline workers see threat.

This gap between executive excitement and employee worry is killing your AI initiatives before they even get started.

You can have the best AI tools on the market. But if your people don't understand why they're being asked to change, don't want to change, or don't know how to change, you've wasted your investment.

What Is the ADKAR Framework?

ADKAR is a change management model developed by Prosci. It stands for:

Awareness – Understanding why the change is happening

Desire – Wanting to participate in the change

Knowledge – Knowing how to change

Ability – Demonstrating the skills and behaviours required

Reinforcement – Making the change stick long-term

Unlike process-focused change models, ADKAR puts individuals at the centre. It recognises that organisations don't change; people do. And people change in a specific sequence.

Skip a step, and you'll hit resistance. Push too fast, and you'll lose people. This is why so many AI rollouts stall after the initial excitement fades.
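Because the elements are sequential, one practical use is to score each element for a person or team and find the first weak one, since effort spent on later elements won't stick until it's resolved. ADKAR assessments commonly score each element on a 1 to 5 scale; the Python sketch below assumes that scale and treats a score of 3 or lower as a barrier, an illustrative cut-off:

```python
ADKAR_STAGES = ("Awareness", "Desire", "Knowledge", "Ability", "Reinforcement")


def barrier_point(scores, threshold=3):
    """Return the first ADKAR element, in sequence, scoring at or below
    `threshold` on an assumed 1-5 scale, or None if no element is weak.

    Later elements are deliberately ignored: a low Ability score is not
    the place to start if Desire is also low."""
    for stage in ADKAR_STAGES:
        score = scores[stage]
        if not 1 <= score <= 5:
            raise ValueError(f"{stage} score {score} is outside the 1-5 scale")
        if score <= threshold:
            return stage
    return None


if __name__ == "__main__":
    # Invented scores for one team.
    team_scores = {"Awareness": 4, "Desire": 2, "Knowledge": 4, "Ability": 2, "Reinforcement": 1}
    print(f"Start with: {barrier_point(team_scores)}")
```

Here Ability and Reinforcement also score poorly, but the sketch points at Desire: more training or more practice time won't help while people don't want the change.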

Applying ADKAR to AI Adoption

Here's what each stage looks like in practice when you're rolling out AI tools across your organisation.

1. Awareness: Why Are We Doing This?

Before anyone learns a new tool, they need to understand why it matters. This isn't about sending a company-wide email announcing "we're adopting AI." It's about answering the questions your people are actually asking:

Why now?

What problem does this solve?

How will this affect my job?

Most organisations skip straight to training. Big mistake. If employees don't understand the business case (or worse, if they think AI is being brought in to replace them), you've already lost them.

What this actually requires:

Transparent communication about the strategic reasons behind AI adoption

Honest conversations about what will and won't change in people's roles

Leadership visibility, not just an announcement from IT

The failure to build awareness early is one of the most common mistakes in AI change management.

2. Desire: What's In It For Me?

Awareness gets people's attention. Desire gets their buy-in.

This is where you need to shift from "here's why the company needs AI" to "here's why you might actually want this." And that means addressing fears head-on.

Most employees aren't resistant to technology. They're resistant to uncertainty. They want to know:

Will this make my job easier or harder?

Am I going to look incompetent while I learn?

Is this just more work on top of everything else?

What this actually requires:

Showing how AI reduces tedious tasks rather than adding complexity

Identifying early adopters who can model positive experiences

Creating psychological safety around experimentation and mistakes

Addressing the "what about my job?" question directly

You can't manufacture desire through mandates. You build it through trust and relevance.

3. Knowledge: How Do I Do This?

Once people want to engage, they need to know how. This is the training stage, but it's not just about clicking through an e-learning module.

Effective AI training looks different from traditional software training. AI tools are probabilistic, not deterministic. They require judgement, context, and iteration. Your people need to understand not just which buttons to press, but how to think about AI as a collaborator.

What this actually requires:

Practical, role-specific training (not generic overviews)

Clear documentation and quick-reference guides

Access to coaching and support during the learning curve

Permission to experiment without fear of breaking things

The UK's AI training gap is well-documented: 78% of workers are using AI, but only 24% have received formal training. That's a Knowledge gap waiting to cause problems.

4. Ability: Can I Actually Do This?

Knowledge and ability aren't the same thing. I can know how to play the piano in theory. That doesn't mean I can perform at a concert.

Ability is about translating knowledge into practice. It's where the rubber meets the road, and where many AI initiatives quietly fail.

This stage requires patience. People need time to fumble, make mistakes, and build confidence. They need space in their workload to actually practice. And they need support when they get stuck.

What this actually requires:

Protected time for learning and experimentation

Removal of conflicting priorities and outdated systems

Identification and support for those struggling to adapt

Realistic expectations about the learning curve

If your people are expected to adopt AI while maintaining their full workload with no additional support, you're setting them up to fail.

5. Reinforcement: Making It Permanent

Here's where most organisations drop the ball completely.

They run a training programme, see some initial adoption, declare victory, and move on. Six months later, people have quietly reverted to their old ways of working.

Reinforcement is about making the change stick. It means embedding AI into how work actually gets done, not as an optional extra but as the default.

What this actually requires:

Integrating AI usage into performance expectations and reviews

Celebrating wins and sharing success stories

Addressing backsliding quickly and supportively

Continued leadership visibility and commitment

Regular check-ins to identify new barriers or skill gaps

Behavioural change doesn't happen overnight. It requires consistent reinforcement over time.

Using ADKAR to Diagnose Resistance

One of the most useful things about ADKAR is its diagnostic power.

When someone isn't adopting AI, you can pinpoint exactly where they're stuck:

Not using it at all? Probably an Awareness or Desire gap.

Using it badly? Probably a Knowledge gap.

Trying but struggling? Probably an Ability gap.

Used it for a while then stopped? Probably a Reinforcement gap.

This lets you target your interventions rather than throwing generic "more training" at everyone. Different people in different departments will face different barriers. ADKAR helps you identify and address them specifically.
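Targeting can start as a simple tally across a team. A minimal Python sketch that encodes the symptom list above and counts likely gaps; the symptom labels are taken from the list, ambiguous symptoms count toward every candidate gap, and the team data is invented:

```python
from collections import Counter

# Symptom -> likely ADKAR gap(s), from the list above. An ambiguous symptom
# maps to every candidate gap; a conversation settles which one applies.
SYMPTOM_TO_GAPS = {
    "not using it at all": ("Awareness", "Desire"),
    "using it badly": ("Knowledge",),
    "trying but struggling": ("Ability",),
    "used it then stopped": ("Reinforcement",),
}


def gap_profile(observed_symptoms):
    """Tally likely gaps across a team from one observed symptom per person."""
    tally = Counter()
    for symptom in observed_symptoms:
        tally.update(SYMPTOM_TO_GAPS[symptom])
    return tally


if __name__ == "__main__":
    # Invented observations for a six-person team.
    team = ["used it then stopped"] * 3 + [
        "not using it at all",
        "using it badly",
        "trying but struggling",
    ]
    for gap, count in gap_profile(team).most_common():
        print(f"{gap}: {count}")
```

For this invented team, half the people show a Reinforcement gap, which argues for manager check-ins and shared wins rather than another round of training.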

The Bottom Line

AI adoption isn't a technology project. It's a people project.

The organisations that get this right, treating AI as a change management challenge that requires structured support through Awareness, Desire, Knowledge, Ability, and Reinforcement, are the ones that actually capture the value everyone's promising.

The rest will join the 90% who invested in tools their people never properly used.

If you're a UK business leader planning an AI rollout, or struggling with one that's stalled, start by asking: where are my people actually stuck? The answer will tell you exactly where to focus next.

Ready to get AI adoption right in your organisation? Explore our practical resources for managers and L&D professionals, or read more about why AI implementations fail in UK organisations.

What is Agentic AI? A Complete Guide to Autonomous AI Agents in 2026

Quick Definition: Agentic AI refers to autonomous artificial intelligence systems that independently set goals, plan strategies, and execute tasks across multiple tools—unlike traditional AI assistants that require human prompts for each action.

In March 2023, Bill Gates published a landmark memo titled “The Age of AI has begun.” He compared the emergence of generative AI to the creation of the graphical user interface, a fundamental shift in how humans interact with machines.

He was right, but he was describing the starting gun, not the finish line.

If 2023 was the “Age of the Chatbot”, a time of passive assistants and helpful drafts, then 2026 marks a far more radical transition: The Age of the Agentic.

We have spent the last three years treating AI as a “Co-pilot”: someone who sits in the passenger seat offering directions. But as we enter 2026, the technology is moving into the driver’s seat.

We are shifting from tools that answer questions to systems that execute goals.

What Makes Agentic AI Different: From Helper to Worker

To navigate this landscape, we first need to understand the technology driving it.

For the last few years, we have been living in the era of Generative AI: tools like ChatGPT that act as incredibly smart assistants. You give them a prompt; they give you an answer. Microsoft engineered the narrative of AI as a “Co-pilot”. The AI aided our decision-making, but our hands were still on the proverbial wheel.

But 2026 marks the transition to Agentic AI. Unlike their predecessors, Agentic systems possess the capability for autonomous goal-setting, strategic planning, and adaptive behavior.

You don’t just ask an agent to “write an email”; you give it a goal, and it executes the entire workflow.

Think of this as the death of “glue work.” In the past, human intelligence was required just to glue different apps together—copying data from a PDF, pasting it into Excel, and emailing a summary. Agentic AI removes that friction. It doesn’t just generate text; it connects tools.
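The difference between answering a prompt and executing a goal is easiest to see as a loop: plan the next step, call a tool, update the state, repeat until the goal is met. A deliberately simplified Python sketch of the glue-work example above; the tools are hypothetical stand-ins, and the planner is hard-coded where a real agent would ask a language model to choose the next step. It does not reflect how Marblism or any other specific product works:

```python
from typing import Callable, Dict, Optional


# Hypothetical stand-in tools for the PDF -> Excel -> email chain.
# Each takes the shared working state and returns it updated.
def extract_pdf_totals(state: dict) -> dict:
    state["rows"] = [("ACME", 1200.0), ("Globex", 850.0)]  # pretend we parsed a PDF
    return state


def update_spreadsheet(state: dict) -> dict:
    state["total"] = sum(amount for _, amount in state["rows"])
    return state


def email_summary(state: dict) -> dict:
    state["email"] = f"Invoices processed: {len(state['rows'])}, total £{state['total']:,.2f}"
    return state


TOOLS: Dict[str, Callable[[dict], dict]] = {
    "extract_pdf_totals": extract_pdf_totals,
    "update_spreadsheet": update_spreadsheet,
    "email_summary": email_summary,
}


def plan_next_step(state: dict) -> Optional[str]:
    """Stand-in planner. A real agent would ask a model which tool to call
    next; here fixed rules check what the goal still lacks."""
    if "rows" not in state:
        return "extract_pdf_totals"
    if "total" not in state:
        return "update_spreadsheet"
    if "email" not in state:
        return "email_summary"
    return None  # goal satisfied


def run_agent(goal: str, max_steps: int = 10):
    """Plan -> act -> observe loop, capped so a confused planner can't run forever."""
    state: dict = {"goal": goal}
    steps_taken = []
    for _ in range(max_steps):
        step = plan_next_step(state)
        if step is None:
            break
        state = TOOLS[step](state)
        steps_taken.append(step)
    return state, steps_taken


if __name__ == "__main__":
    state, steps = run_agent("Summarise this month's invoices for finance")
    print(steps)
    print(state["email"])
```

The human supplies one goal; the loop, not the person, decides which tool runs next. The step cap is a small example of the guardrails real agent systems need.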

I've recently experienced this firsthand with a platform called Marblism. While some of its features aren’t quite as revolutionary as the marketing copy would suggest (look out for Thursday’s post for more on that), the utility is undeniable.

Marblism currently handles my inbox, manages my calendar, optimizes my SEO, and even takes calls on my behalf. (Give it a try yourself by calling my agentic assistant Rachel at +44 114 494 0377). I have six “people” in my team, and I am paying barely $20 a month.

This shift changes AI from a productivity enhancer to a potential job displacer, and the numbers behind it are sobering.

Research by Gravitee, based on a survey of 250 C‑suite executives at large UK firms, forecasts that about 100,000 AI agents could join the UK workforce by the end of 2026, with 65% of firms expecting to reduce headcount over the same period.

Major players are moving the same way: internal Amazon documents reveal plans to avoid hiring more than 160,000 workers by 2027 through automation.

Meanwhile, as businesses struggle to adapt to rising rates and costs, UK unemployment is predicted to rise to 5.5%, the highest it’s been in eleven years.

The Digital Assembly Line

The disruption we are facing isn’t just about individual bots doing individual tasks. It is about what happens when those bots start talking to each other.

A recent extensive report from Google Cloud on 2026 trends describes this as the rise of the “Digital Assembly Line.”

We are seeing the emergence of the Agent2Agent (A2A) protocol, a standard that allows agents to hand off tasks to one another without a human bottleneck. Imagine a Sales Agent that finds a lead and hands it to a Research Agent to qualify it, which then triggers a Legal Agent to draft a contract.
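To make the hand-off idea concrete, here is a minimal Python sketch of the Sales, Research and Legal chain. It does not use the real A2A protocol, which defines its own message formats and transport; the agent functions, the `Task` class and the toy qualification rule are all hypothetical stand-ins. The point is the shape: each agent does its step and names the next one, and the human "foreman" reads the history afterwards rather than relaying every step by hand.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical sketch of agent-to-agent hand-offs. This is NOT the A2A
# protocol's API; each "agent" is just a function that updates a shared
# task and returns the name of the next agent (or None to stop).


@dataclass
class Task:
    payload: dict = field(default_factory=dict)
    history: list = field(default_factory=list)  # which agents acted, in order


def sales_agent(task: Task) -> Optional[str]:
    task.payload["lead"] = "Acme Ltd"  # placeholder lead
    return "research"


def research_agent(task: Task) -> Optional[str]:
    # Toy qualification rule standing in for real research.
    task.payload["qualified"] = task.payload["lead"].endswith("Ltd")
    return "legal" if task.payload["qualified"] else None


def legal_agent(task: Task) -> Optional[str]:
    task.payload["contract"] = f"Draft contract for {task.payload['lead']}"
    return None  # end of the line


AGENTS: dict[str, Callable[[Task], Optional[str]]] = {
    "sales": sales_agent,
    "research": research_agent,
    "legal": legal_agent,
}


def run_pipeline(start: str, task: Task, max_hops: int = 10) -> Task:
    """Pass the task from agent to agent until one returns None."""
    current: Optional[str] = start
    while current is not None and len(task.history) < max_hops:
        task.history.append(current)
        current = AGENTS[current](task)
    return task


result = run_pipeline("sales", Task())
print(result.history)              # ['sales', 'research', 'legal']
print(result.payload["contract"])  # Draft contract for Acme Ltd
```

The `max_hops` cap is a simple guard against two agents handing work back and forth forever, which is exactly the kind of failure the human overseer is there to catch.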

This isn’t sci-fi; it’s the emerging infrastructure of a new kind of work. We are moving from a world where people talk to software, to a world where software talks to software. In this model, the human role shifts from “worker” to “foreman”, the person who designs and oversees the factory floor rather than the one tightening the bolts.

The Shift to “Intent-Based” Computing

This technological shift is forcing an existential shift in how we define “work.” According to Google’s analysis, we are moving from Instruction-Based to Intent-Based computing.

For the last 40 years, professional value was defined by “Instruction”: knowing how to write the Python script, how to format the pivot table, or how to draft the legal clause. You were paid for the how.

In the Agentic Era, the machine handles the how. You are paid for the Intent, defining the what and the why.

This new employee workflow consists of four distinct steps:

Delegating (identifying the task)

Setting Goals (defining the outcome)

Outlining Strategy (adding human nuance)

Verifying Quality (acting as the final checkpoint)
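One way to see the difference between instruction and intent is to write the brief down. The Python sketch below is purely illustrative: `IntentBrief`, its fields and the keyword-based acceptance check are hypothetical, and a real quality check would be far richer than looking for one word. Notice that nothing in the brief says how to do the work; it only captures the what, the why and the checkpoint.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical "intent brief": one field per step of the workflow above.
# The human supplies the what and the why; the agent supplies the how.


@dataclass
class IntentBrief:
    task: str                      # Delegating: what is handed off
    goal: str                      # Setting Goals: the outcome wanted
    accept: Callable[[str], bool]  # Verifying Quality: the final checkpoint
    strategy_notes: list = field(default_factory=list)  # Outlining Strategy


def review(brief: IntentBrief, agent_output: str) -> str:
    """The human stays the checkpoint: output ships only if it passes."""
    return "accepted" if brief.accept(agent_output) else "sent back"


brief = IntentBrief(
    task="Summarise Q3 supplier contracts",
    goal="One-page summary that flags every renewal date",
    accept=lambda text: "renewal" in text.lower(),
    strategy_notes=["Prioritise contracts over £50k", "Plain English, no jargon"],
)

print(review(brief, "Three contracts. Renewal dates: Jan, Mar, Jun"))  # accepted
print(review(brief, "Three contracts summarised"))                     # sent back
```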

If your current skill set is purely “Instructional” (executing the steps rather than defining the strategy), you might want to start thinking about reskilling.

The Vanishing Entry-Level & The “Closed Loop”

Jack Clark, Co-founder of Anthropic, describes domains like coding as “closed loop”: you use an LLM to generate or tweak code, then automatically run tests and iterate based on feedback with relatively little human intervention.
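The loop Clark describes can be sketched in a few lines of Python. Here `propose` is a hypothetical stand-in for an LLM call that returns a buggy draft first and a corrected one once it has seen failing tests; the test cases are likewise invented. What matters is that the tests, not a person, decide when the loop stops, which is why domains with cheap automatic checks are the first to close the loop.

```python
# Minimal closed-loop sketch: generate code, run tests, feed failures back.

TEST_CASES = [((2, 3), 5), ((0, 0), 0), ((-1, 1), 0)]


def propose(feedback: list) -> str:
    """Hypothetical stand-in for an LLM: buggy draft first, fix after feedback."""
    if not feedback:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def run_tests(source: str) -> list:
    """Execute the candidate and return the inputs whose results were wrong."""
    namespace: dict = {}
    exec(source, namespace)  # acceptable only because the source is our own fixed string
    add = namespace["add"]
    return [args for args, expected in TEST_CASES if add(*args) != expected]


def closed_loop(max_attempts: int = 5):
    feedback: list = []
    for attempt in range(1, max_attempts + 1):
        source = propose(feedback)
        feedback = run_tests(source)  # failures become the next round's feedback
        if not feedback:
            return attempt, source
    return None, None  # give up and escalate to a human


attempt, source = closed_loop()
print(f"All tests passed on attempt {attempt}")  # All tests passed on attempt 2
```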

Agents are increasingly taking over mundane, repetitive, multi-step workflows, and humans are shifting into roles that focus on delegating tasks, setting goals, and verifying quality rather than doing every step themselves.

Put together, these trends suggest that many traditional junior tasks (basic coding, document drafting, and routine data verification) are among the first work items to be automated by agentic systems, because they are easy to specify, easy to check, and lend themselves to closed-loop execution.

The risk is not that all entry‑level jobs vanish overnight, but that enough routine “grunt work” is automated that a new kind of broken rung appears on the career ladder: there are fewer opportunities to learn by doing low‑level work and observing how senior people make decisions, which has historically been the apprenticeship‑by‑osmosis path into many professions.

The Skills Lifespan Crisis: The Floor, Not the Ceiling

Compounding this is the acceleration of skill obsolescence. In the past, a solid degree or a technical certification could sustain a career for a decade or two.

That stability is gone. The World Economic Forum’s latest Future of Jobs analysis suggests that around 60% of workers worldwide will need significant upskilling or reskilling by 2027. The data from the tech sector is even more brutal: the “half-life” of a professional skill, the time it takes for that skill to lose half its value, has plummeted to just two years.

Think about that. By the time you finish a two-year master’s degree, the technical skills you learned in the first semester may already have lost close to half their value. We are entering an era where “learning” isn’t a phase of life you complete in your 20s; it is a weekly requirement for economic survival.
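A half-life implies exponential decay, so the arithmetic is easy to check. The sketch below assumes, as a simplification, that a skill's value decays smoothly with the two-year half-life quoted above; real skills don't age this neatly. On that assumption, a skill learned at the start of a two-year degree keeps about half its original value by graduation, and only a quarter two years after that.

```python
def remaining_value(years: float, half_life: float = 2.0) -> float:
    """Share of a skill's original value left after `years`, assuming exponential decay."""
    return 0.5 ** (years / half_life)


for years in (0.5, 1, 2, 4):
    print(f"after {years:g} yr: {remaining_value(years):.0%}")
# after 0.5 yr: 84%
# after 1 yr: 71%
# after 2 yr: 50%
# after 4 yr: 25%
```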

The danger here is complacency. We often judge AI by its current limitations.

Three years ago, when ChatGPT first became available, I used it to create learning materials. I smugly told myself that AI could never coach or replace me as a facilitator. Just read the “How People Use ChatGPT” report from September last year to see how that’s going for me.

I fell into a common trap: judging the technology by its current flaws rather than its trajectory. As Patrick McKenzie put it in a recent debate on the future of AI:

“What you see today is the floor, not the ceiling.”

We look at a chatbot hallucination or a clumsy agent error and think, “I’m safe.” But Anthropic’s team reminds us of a chilling reality: “This is the worst it will ever be.”

If an agent can do 50% of your job badly today, you must assume it will do 90% of it perfectly by 2027. Betting on AI’s current flaws to save your job is a strategy with an expiration date.

Final Thoughts

I know this is heavy. When I published “The Decline of the Knowledge Worker” last week, 60 people unsubscribed, significantly higher than my usual churn. I suspect this post might trigger a similar exodus.

If you’re feeling pessimistic, I get it. But here’s the thing: we’re standing at the edge of the biggest reshuffling of economic opportunity in a generation.

The people who lose out won’t be the ones lacking credentials or experience; they’ll be the ones who assumed their current skills were permanent assets. The winners will be those who see this moment for what it is: not the end of meaningful work, but the end of work-as-usual.

Every major technological shift creates winners and losers. The difference isn’t talent or luck; it’s timing and adaptability. The printing press didn’t eliminate writers; it eliminated scribes who refused to learn the new tools. The spreadsheet didn’t kill accountants; it killed the ones who insisted on paper ledgers.

This wave is bigger, faster, and more fundamental. But the same principle applies.

Next week, we’ll flip this conversation on its head. We’ll look at what it actually takes to thrive in the Agentic Era, not just survive it. Because while the ground is shifting beneath us, the people who learn to navigate instability will build careers that are more resilient, more valuable, and frankly, more interesting than anything the old world offered.

The question isn’t whether AI will change your job. It’s whether you’ll be ready when it does.

Thanks to The Substack Post for their amazing piece “The AI revolution is here. Will the economy survive the transition?”, which helped inspire parts of today’s post. It features some of the biggest names in the business (Michael Burry, Jack Clark, Dwarkesh Patel, and Patrick McKenzie) and covers far more than I could hope to.

You can also download the AI Agent Trends 2026 Report here.

Frequently Asked Questions About Agentic AI

What exactly is agentic AI?

Agentic AI refers to autonomous artificial intelligence systems that can independently set their own goals, develop strategies to achieve them, and execute complex multi-step workflows across multiple tools with minimal human intervention. Unlike traditional chatbots that respond to individual prompts, agentic AI systems operate continuously and independently.

What is the difference between an AI agent and agentic AI?

AI agents are a broader category of autonomous software systems that have been around for decades. Agentic AI is a modern subset of AI agents—specifically, large language model-powered autonomous systems. All agentic AIs are AI agents, but not all AI agents are agentic. Traditional AI agents might follow pre-programmed rules, while agentic AI systems use LLMs to understand context, adapt, and make intelligent decisions.

How is agentic AI different from ChatGPT?

ChatGPT is a generative AI tool that responds to user prompts and generates text, but it doesn’t take autonomous action. You ask it a question, and it provides an answer. Agentic AI goes further—you give it a goal (like “optimize my calendar and email inbox”), and it automatically executes the entire workflow across multiple tools without needing a prompt for each step.

What are real-world examples of agentic AI systems?

Marblism is one example: it autonomously manages inboxes and calendars, handles SEO optimization, and even takes calls. Other emerging examples include N8N (workflow automation with AI), systems for autonomous data analysis, customer service automation, HR workflow management, and code generation with automatic testing and iteration.

What is an N8N AI agent?

N8N is a workflow automation platform that increasingly incorporates AI agents. It allows you to build and automate multi-step workflows where agentic AI systems can handle complex, sequential tasks across different applications and tools without manual intervention.

Can agentic AI replace human jobs?

This is one of the key concerns. Research from Gravitee suggests around 100,000 AI agents could join the UK workforce by 2026, with 65% of firms expecting headcount reductions. However, rather than complete replacement, we’re more likely to see job transformation—roles shifting from “doing the work” to “overseeing and verifying the work.” The question isn’t whether AI will change your job, but whether you’ll be ready when it does.

What are the key capabilities that define agentic AI?

Agentic AI systems possess four core capabilities: (1) autonomous goal-setting, (2) strategic planning and reasoning, (3) adaptive behavior and learning, and (4) tool integration—the ability to use multiple applications and services to accomplish objectives.

Is agentic AI the same as RPA (Robotic Process Automation)?

No. RPA automates repetitive, rule-based tasks through scripted workflows. Agentic AI is more flexible and intelligent—it can understand context, make decisions, adapt to changes, and reason about complex problems. RPA is like following a script; agentic AI is like having an intelligent employee who understands the goal and figures out how to achieve it.


How to Train Employees on AI in 2026: Fixing the Adoption Gap

Since 2024, there's been a massive surge in organizations training employees on AI. Yet by the end of 2025, much of that learning has evaporated into the ether of "L&D completion rates." It's not for lack of trying. UK workers are willing to learn: 89% say they want AI skills. The tools aren't the problem either; the beauty of LLMs is that they're easier to use than almost any enterprise software in history.

But somewhere between the workshop and the workflow, things fall apart.

People attend the session, nod along, maybe try a prompt or two, and then go back to doing things the old way. 97% of HR leaders say their organisations offer AI training, but only 39% of employees report having received any. That is a 58-point gap between what HR leadership believes is happening and what the workforce is actually experiencing.

This article breaks down why most AI training fails, what actually works based on real implementations, and exactly what we're doing differently at The Human Co. for the year ahead. You'll come away with a clear diagnosis of your own adoption gaps, concrete steps you can take immediately, and a framework to ensure your 2026 budget actually moves the needle.

Let's start with what's not working, and why.

The Four Reasons AI Training Falls Flat

Even when organizations do invest in training, they usually invest in the wrong things. The 58-point perception gap tells you something has gone badly wrong between intention and execution.

Here are the four specific traps organizations fall into when they try to train employees on AI:

1. The "One-and-Done" Workshop

The workshop model makes sense from an HR perspective: get everyone trained quickly, minimize disruption, and check the compliance box. But it ignores how human memory works.

Without immediate application, people forget what they've learned within weeks. I've watched this happen repeatedly. Someone attends a two-hour workshop on any given topic, feels energized, and tries a few things. But then work gets busy. A month later, the capability has evaporated.

2. Generic, Role-Agnostic Content

Sales, Finance, Operations, and HR all sitting through the exact same session on "Ethical AI Use."

It makes administrative sense. It's terrible for adoption.

A marketing manager doesn't need the same AI capabilities as a data analyst. When training is generic, people tune out. They sit through examples that don't relate to their reality and walk away thinking, "That was interesting, but not for me."

Skills England found that this kind of generic provision creates significant barriers, with many employers hesitating to commission AI training because of confusion over AI terminology—in some cases assuming it means coding and machine learning, in others expecting dashboards or compliance content, when their teams actually need practical, role-specific skills.

When training does not speak to a specific role, it is easily treated as general interest and quickly forgotten.

3. The "Menu" Problem

Most AI training starts with: "Here's how to use Copilot."

You learn the interface, how to write a prompt, maybe a few advanced features. It's the equivalent of teaching someone Excel by walking through every single menu option, one by one.

The problem? People learn the buttons, not the solutions.

Imagine you're an L&D manager. You train 200 people on "Prompt Engineering Basics." The content is solid. Yet three months later, usage is non-existent. When you ask why, the answer is consistent: "I don't know when I'd actually use this."

They learned how to operate the tool. They never learned what problems it could solve.

4. Measuring Inputs When You Should Be Measuring Outcomes

This one frustrates me the most because it's so common, and I'll admit, I've been guilty of it in my own career.

Organizations love to track completion rates. How many people attended? How many finished the module? These numbers look great on a dashboard. They keep the boss happy.

But completion rates measure whether training happened. They don't measure whether anything changed.

Meanwhile, UK employers’ training expenditure has fallen 10% in real terms since 2022, dropping from £59bn to £53bn and reaching its lowest level in more than a decade. Organizations are spending less, measuring inputs instead of outcomes, and then wondering why their AI adoption flatlines.
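As a quick check, the two spending figures quoted above (rounded to the nearest £bn) do match the headline fall of about 10%:

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, as a percentage."""
    return (after - before) / before * 100


print(f"{percent_change(59, 53):.1f}%")  # -10.2%
```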

You are tracking attendance, but all you are really measuring is performativity.

The Underlying Problem

These failures share a common thread: they treat training employees on AI as a knowledge-transfer problem. Learn the tool, check the box, move on.

But the real issue is deeper. Organizations are teaching people about AI when they should be redesigning workflows around AI. That's not a training problem—it's a change management problem.

Deloitte's CTO Bill Briggs recently shared a stat that illustrates this perfectly: 93% of AI budgets flow to technology—models, chips, software. Only 7% goes to the people, culture, workflow redesign, and training required to use it. But even that 7% is being spent wrong.

The technology is ready. The workflows aren't.

A Framework for Effective AI Upskilling

If the problem is treating AI as a training challenge when it's actually a workflow challenge, the solution isn't just better courses. It's a fundamental shift in how we approach capability building.

For decades, L&D professionals have chased the "Holy Grail" of Just-in-Time Learning—delivering the exact right knowledge at the exact moment a person needs to solve a problem. Generative AI is the closest we've ever come to fulfilling that promise.

The organizations seeing real returns in 2025 didn't just buy better software. They moved away from "Just-in-Case" learning (teaching everyone everything, hoping it sticks) to "Just-in-Time" capability building.

Based on successful implementations across the UK, here's a four-part framework for how to train employees on AI in a way that actually sticks.

1. Kill the "Workshop," Build the "Clinic"

The biggest waste of L&D budget is the mass-enrollment bootcamp where employees learn skills they won't use for three months. AI moves so fast that by the time you need the skill, the interface has changed.

Successful programs think long-term. Instead of a "Learning Day," think in terms of quarterly or annual support. Providers like MMC Learning have found success offering 12-month access to "AI Power Clinics"—drop-in sessions (60–90 minutes) where employees bring specific, real-time challenges.

Think of it as IT support, but for capability. An employee doesn't need a four-hour lecture on "The History of LLMs." They need 15 minutes on "Why is this specific client report hallucinating?"

The difference is sustained access versus a one-time event. When people know they can come back next week with a different problem, they actually experiment. When they know it's their only chance to learn, they play it safe and forget everything by Tuesday.

The Fix: Shift your budget from one-off events to sustained access. Create "office hours" where the agenda is set by the employees' current friction points, not an external curriculum.

2. Stop Teaching "Prompting." Start Teaching "Workflow Repair."

The most effective training doesn't start with the tool. It starts with the "grumble"—the part of the job everyone hates.

Analysis of 1,500 L&D conversations reveals that successful adoption rarely looks like a vendor's "ideal flow." It looks like messy, creative experimentation.

Here's what this looks like in practice:

The Manufacturing Example: One company didn't train engineers on "generative writing." They identified a bottleneck: engineers hated writing formal instructions. The solution? Train them to write messy, raw notes, then use AI to rewrite them into polished documentation.

The Legacy Content Example: Another team didn't learn "AI theory." They simply used the tools to modernize the tone of old training files, solving an immediate backlog problem.

In both cases, the "training" was invisible. It was wrapped inside a solution to a painful workflow problem.

This is the shift from teaching tools to teaching solutions. You're not asking "How do I use ChatGPT?" You're asking "How do I get this report done in half the time without losing quality?"

The Fix: Audit your workflows, not your skills. Find the friction. Train people to solve that specific friction. Diagnostic pilots reveal the actual skills gaps—like accuracy flagging or compliance checking—far better than a generic skills matrix.

3. Replace "Fear" with "Agency" (The NHS Model)

Here's a stat that matters: 42% of UK employees fear AI will replace some of their job functions. If your training ignores this, you're planting seeds in frozen ground.

The antidote to fear isn't cheerleading. It's agency.

The NHS offers a perfect case study. Their successful radiology training didn't just teach the tech; it explicitly covered ethical principles and emphasized that AI is there to augment clinicians, not replace them.

By teaching employees where the AI fails—and why a human is legally and ethically required to be in the loop—you flip the narrative. You aren't training them to be replaced; you're training them to be the safety net.

This approach addresses the psychological barriers head-on. 86% of employees question whether AI outputs are accurate. Instead of dismissing that concern, successful programs validate it. They show people how to critique AI outputs, how to spot hallucinations, and where human judgment is non-negotiable.

The Hard Truth: Some roles are at risk. Administration, accountancy, and coordination roles are being automated. You'll have employees who know, deep down, that AI threatens their core function.

You need to map out what that role looks like on the other side. Not vague promises, but specific skills that AI cannot replicate. And you must do this with the person affected—don't do this in isolation with your HRBP.

The Fix: Build psychological safety into the training design. Show people where AI fails. Teach them to be the quality control layer. Give them agency over how they use the tools, not just instructions to follow.

4. Build Governance That Enables, Not Blocks

Shadow AI is now normal. One recent study found that around four in ten employees have shared sensitive work information with AI tools without their employer’s knowledge. Another reported that 43% of workers admitted to pasting sensitive documents, including financial and client data, into AI tools.

This "Shadow AI" problem isn't a compliance issue. It's a symptom of broken governance.

When IT blocks the tools employees need, people don't stop using AI—they just stop asking permission. They use ChatGPT on their phones. They upload company data to unauthorized platforms. They create the exact security nightmare you were trying to avoid.

The solution isn't tighter restrictions. It's an AI Council.

This isn't a bureaucratic blocker; it's an enabler. By bringing Legal, IT, and Business Units into a single body, you create a "safe harbor" for experimentation. You move AI from being an "IT project" (which fails 80% of the time) to a business transformation project.

The AI Council sets guardrails, approves use cases, and creates pathways for employees to test tools safely. It replaces "Can we use this?" with "Here's how we use this responsibly."

The Fix: Stop banning tools and start creating approved pathways. Establish an AI Council with cross-functional representation. Make it easy to experiment within guardrails rather than forcing people underground.

How to Actually Measure Success

If you want meaningful learning, stop reporting on completion rates. AI implementation is behavior change. It's also risk reduction—which I know isn't half as exciting as innovation performativity, but it pays the bills.

The problem with traditional L&D metrics is they measure activity, not impact. You know how many people attended. You don't know if anything changed.

Here's how to measure what actually matters when you train employees on AI:

1. Audit Trails Over Quizzes

Most training ends with a knowledge check: "What are three best practices for prompt engineering?" The learner regurgitates what they just heard, passes, and promptly forgets.

Instead, require learners to submit the audit trail of their work—the prompt, the output, and their critique of it. This proves judgment, not just attendance.

For example: "Here's the prompt I used to draft this client proposal. Here's what it generated. Here's what I changed and why."

That submission tells you three things a quiz never will:

Can they spot when AI output is wrong?

Do they understand the limits of the tool?

Are they applying it to real work or just hypotheticals?

The Fix: Replace post-training quizzes with work artifacts. Make people show their process, not recite best practices.
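If you collect these submissions digitally, even a simple structure makes them easier to review consistently. The sketch below is hypothetical: the `AuditTrail` fields mirror the prompt, output and critique described above, and the ten-word threshold for a "thin" critique is an arbitrary assumption. Treat the flags as prompts for a human reviewer, not as a grade.

```python
from dataclasses import dataclass


@dataclass
class AuditTrail:
    prompt: str      # what the learner asked the AI
    output: str      # what the AI produced
    critique: str    # what the learner changed, and why
    real_work: bool  # applied to a live task rather than a hypothetical


def review_gaps(sub: AuditTrail, min_critique_words: int = 10) -> list:
    """Flag gaps a reviewer should follow up on; an empty list means none found."""
    gaps = []
    if not sub.prompt.strip() or not sub.output.strip():
        gaps.append("missing prompt or output")
    if len(sub.critique.split()) < min_critique_words:
        gaps.append("critique too thin to show judgment")
    if not sub.real_work:
        gaps.append("hypothetical task, not real work")
    return gaps


strong = AuditTrail(
    prompt="Draft a renewal proposal for our largest client",
    output="(AI draft)",
    critique="Removed an invented statistic, corrected the renewal date, "
             "and softened the pricing language for this client.",
    real_work=True,
)
weak = AuditTrail(prompt="Write a poem", output="(AI poem)", critique="Looks fine.", real_work=False)

print(review_gaps(strong))  # []
print(review_gaps(weak))
```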

2. Quality Metrics in Real Workflows

If you've trained your sales team to use AI for CRM entries, don't measure how many people completed the module. Measure the strategic depth of CRM entries compared to untrained peers.

Are the entries more detailed? More accurate? Do they include next steps and context that help the next person in the chain?

If you've trained finance to automate reporting, measure cycle time. How long does it take to produce a monthly report now versus before? What's the error rate?

These metrics tie training directly to business outcomes. They also reveal whether people are actually using what they learned or just going through the motions.

The Fix: Identify the business metric you're trying to move before you design the training. Then track that metric, not attendance.
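For the finance example, the comparison is simple arithmetic once you have the numbers. The figures below are invented purely for illustration (three months of report cycle times and error counts, before and after training); the point is that the metric is defined up front, before anyone designs the training.

```python
from statistics import fmean

# Illustrative data only: monthly report cycle time (days) and errors found in review.
before = {"cycle_days": [6, 7, 5], "errors": [4, 3, 5]}
after = {"cycle_days": [4, 3, 4], "errors": [2, 3, 1]}


def change(metric: str) -> float:
    """Percentage change in the average of `metric`, before vs after training."""
    b, a = fmean(before[metric]), fmean(after[metric])
    return (a - b) / b * 100


for metric in ("cycle_days", "errors"):
    print(f"{metric}: {change(metric):+.1f}%")
# cycle_days: -38.9%
# errors: -50.0%
```

A before/after comparison like this can't separate the training effect from, say, a quieter month; comparing against untrained peers over the same period, as in the CRM example, helps with that.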

3. Risk Reduction Metrics

Remember the Shadow AI problem? 43% of workers admitting to pasting sensitive documents into AI tools?

One of the clearest measures of successful AI training is whether that number goes down.

Track:

Reduction in unauthorized tool usage

Decrease in data breaches or near-misses

Increase in employees using approved platforms

Decline in IT helpdesk tickets related to AI confusion

These aren't vanity metrics. They're leading indicators that your governance and training are actually working together.

If people are still sneaking around IT to use ChatGPT on their phones, your training didn't address the real problem—which is that your approved tools are either too restrictive or too clunky.

The Fix: Measure adoption of approved tools and reduction in risky behavior. If Shadow AI persists, your training (or your governance) is missing the mark.
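Tracking whether AI use is moving onto approved platforms can start from whatever usage data IT already has. This sketch assumes a hypothetical log of (quarter, tool) events, for example exported from proxy or single sign-on logs, plus a hand-maintained set of approved tools; the tool names are placeholders.

```python
# Hypothetical AI-usage events, e.g. exported from proxy or SSO logs.
events = [
    ("Q1", "approved_assistant"), ("Q1", "personal_chatbot"),
    ("Q1", "personal_chatbot"), ("Q1", "unvetted_pdf_tool"),
    ("Q2", "approved_assistant"), ("Q2", "approved_assistant"),
    ("Q2", "approved_assistant"), ("Q2", "personal_chatbot"),
]
APPROVED = {"approved_assistant"}


def approved_share(quarter: str) -> float:
    """Fraction of a quarter's AI-usage events that went through approved tools."""
    tools = [tool for q, tool in events if q == quarter]
    if not tools:
        raise ValueError(f"no usage events recorded for {quarter}")
    return sum(tool in APPROVED for tool in tools) / len(tools)


for q in ("Q1", "Q2"):
    print(f"{q}: {approved_share(q):.0%} on approved tools")
# Q1: 25% on approved tools
# Q2: 75% on approved tools
```

One caveat: usage on personal phones never reaches company logs, so a rising share is necessary but not sufficient evidence that Shadow AI is shrinking.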

4. Behavioral Indicators Over Self-Reported Confidence

Post-training surveys love to ask: "On a scale of 1-10, how confident are you using AI tools?"

This metric is useless. 30% of employees admit to exaggerating their AI abilities because they feel insecure. You're measuring performance anxiety, not capability.

Better indicators:

How many people are bringing real problems to your AI Clinic sessions?

How many are submitting requests to your AI Council for new use cases?

How many are actively sharing prompts or workflows with colleagues?

These behaviors signal genuine adoption. Someone who's actually using AI will have questions. They'll hit friction points. They'll want to do more.

Silence after training isn't success—it's failure dressed up as compliance.

The Fix: Track active engagement signals—questions asked, use cases proposed, peer-to-peer sharing. These behaviors indicate people are actually experimenting.
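Counting distinct people per signal, rather than raw events, stops one enthusiast from inflating the numbers. The log format and signal names below are assumptions for illustration; in practice the data might come from clinic sign-ups, AI Council requests and a shared prompt library.

```python
from collections import defaultdict

# Hypothetical engagement log: (person, signal) pairs.
log = [
    ("amira", "clinic_question"),
    ("ben", "clinic_question"),
    ("ben", "clinic_question"),  # a repeat visit still counts ben once
    ("amira", "council_request"),
    ("chloe", "shared_workflow"),
]


def people_per_signal(entries) -> dict:
    """Number of distinct people behind each type of engagement signal."""
    people = defaultdict(set)
    for person, signal in entries:
        people[signal].add(person)
    return {signal: len(names) for signal, names in people.items()}


print(people_per_signal(log))
# {'clinic_question': 2, 'council_request': 1, 'shared_workflow': 1}
```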

The Bigger Picture: Stop Optimizing for the Wrong Outcome

MIT's 2025 study found that 95% of generative AI pilots fail to create measurable value. That's nearly double the failure rate of traditional IT projects.

The reason isn't that the technology doesn't work. It's that organizations measure the wrong things.

They measure training completion while usage stalls. They measure licenses purchased while workflows stay unchanged. They measure AI "readiness" while risk actually increases.

When you shift from measuring inputs to measuring outcomes—quality, speed, risk reduction, behavior change—you expose where the gaps actually are. And that's when you can fix them.

The Real Constraint Isn't Technology

We started this article with a stark reality: there's a 58-point gap between what leadership thinks is happening with AI training and what employees are actually experiencing.

That gap exists because organizations are solving the wrong problem.

The constraint in 2026 isn't technological capability; that problem has been solved. LLMs are easier to use than almost any enterprise software in history. The constraint is human readiness.

Not because people can't learn. UK workers are willing—89% say they want AI skills. But they're being taught buttons when they need solutions. They're attending workshops when they need clinics. They're being measured on attendance when the real question is whether anything changed.

The organizations that figure out how to train employees on AI effectively won't be the ones with the most expensive licenses. They'll be the ones that stopped treating this as a training problem and started treating it as a change management problem.

They'll be the ones that:

Built sustained capability programs instead of one-off workshops

Fixed workflows instead of teaching prompts

Addressed psychological barriers instead of pretending they don't exist

Measured risk reduction and behavior change instead of completion rates

The technology is ready. The question is: are your people?

Next Steps: Let's Build a Program That Actually Works

If you recognize your organization in the failures above—or if you're ready to finally close the adoption gap—we should talk.

At The Human Co., we don't do "tick-box" compliance training. We build the human infrastructure that allows your AI investment to actually pay off.

I'm Paul Thomas, and I help organizations design AI programs that work in the real world, not just on a slide deck.

Here's how we can start:

Not sure where your adoption gaps are?

I've built a 15-minute diagnostic audit that maps your current AI investment against the four failure modes we covered above. It'll show you exactly where you're losing value—and what to fix first.

Take the diagnostic audit, or book a 30-minute discussion of your results.

We can also help you:

Run a targeted "Workflow Clinic" for a specific team to solve one expensive problem they face daily

Design your AI Council structure to enable safe experimentation

Audit your current training spend to identify what's working and what's performative

Stop buying ingredients and start learning the recipe.

Why AI Pilots Fail: The Timeline of a Stalled Rollout

We're doing it again.

Deloitte's CTO just told Fortune that what "really scares CEOs about AI" is the people challenge: moving from pilots to production at scale. Then he spent the entire interview talking about technology infrastructure.

I'm not dunking on Bill Briggs. He's clearly smart, and he's identifying the right problem. But the fact that even someone in his position defaults back to discussing data governance when addressing a people problem tells you everything about why 90% of AI implementations fail.

AI adoption in the workplace isn't a technology deployment: it's a behaviour change, and behaviour change is hard.

Why AI Implementation Consulting Misses the Mark

As specialists in AI change management for mid-market UK firms, we've seen these patterns play out first-hand across dozens of implementations. The approach is backwards every single time.

We ask the C-suite what they want AI to do. We ask Heads of Department how it fits their strategy. We run pilots with hand-picked enthusiasts who'd make anything work.

Know who we don't ask? The people whose jobs are about to fundamentally change.

The customer service rep who's about to have every call monitored and scored by AI. The analyst whose entire role is being automated. The middle manager whose team is being "right-sized" because AI can handle the workflow.

These aren't stakeholders to "bring along" after the decision's made. They're the people who determine whether your AI readiness actually translates to results.

The Predictable Pattern of AI Adoption Failure

I've watched this cycle repeat across organisations of every size:

Phase 1: The Ivory Tower Design It begins with the "Spark." Leadership gets excited about AI’s competitive advantage and hands the mandate down. IT teams build impressive demos that function flawlessly—strictly within controlled environments—while consultants draft beautiful, theoretical road-maps filled with phases, milestones, and optimistic success metrics.

Phase 2: The "Big Bang" Rollout. The organisation moves to execution with high-energy, company-wide communications. The tool is pushed out to the workforce with the expectation of immediate enthusiasm.

Phase 3: The "Silent Veto." The reality on the ground kicks in. Front-line employees, who weren't consulted, comply just enough to avoid trouble but never truly integrate the tool into their workflow. Adoption hits a ceiling at 30%, and the promised productivity gains fail to materialise.

Phase 4: The Quiet Exit. Finally, the pilot is quietly shelved as leadership’s attention shifts to the next shiny initiative. The project is marked as a failure due to "change resistance," leaving the root causes unexamined.


But it's not resistance. It's a completely rational response to being told your job is changing without being part of designing what that change looks like.

What Real AI Capability Building Requires

Ultimately, this isn't just about making employees feel heard; it’s about protecting your investment. If you ignore the people component, you are effectively sabotaging your own data.

What Happens When You Ignore the Human Element

The symptoms of a tech-first approach are distinct and damaging. We see staff building elaborate workarounds to bypass the AI entirely, resulting in data quality so poor that the models become liabilities rather than assets. But the hidden cost is talent. Experienced employees, feeling unheard, often take their institutional knowledge and walk out the door, while those who remain become a demoralised workforce doing the bare minimum.

The Dividend of People-First Capability

Conversely, "doing the human work" pays a specific dividend: advocacy. When employees are involved early, they stop being obstacles and start providing the practical insights needed to fix implementation glitches in real time. This leads to faster adoption curves, sustained behaviour change, and, most importantly, tangible business outcomes that actually match the initial hype.

The Human Co. People-First Framework for AI Change Management

So, how do we operationalise that advocacy? It starts with The Human Co. People-First Framework.

Redefining the Problem Statement

Sustainable adoption doesn't start with technology; it starts with the humans currently doing the work, and it starts before the problem is even defined. We have to ask the uncomfortable questions early: What are they genuinely worried about? What do they know about the workflow that the roadmap ignores? And crucially, what parts of their job do they actually want AI to take off their plate?

Creating the Support System

Answering those questions is only half the battle; the rest requires a genuine support system. This means replacing "fit it in" with protected learning time, and replacing vague promises with concrete career paths.

It requires an environment of psychological safety, where staff can experiment and fail without consequence. And perhaps most importantly, it demands manager enablement, training leaders to have honest, difficult conversations about role evolution and job security, rather than just cheering from the sidelines.

The Reality

This is not "soft skills" window dressing. This is the difference between a theoretical success in a boardroom deck and a practical success on the front lines.

Why UK Firms Face Unique AI Cultural Shift Challenges

Applying this framework is vital anywhere, but in the UK market it is non-negotiable. Why? Because the standard Silicon Valley playbook simply doesn't survive contact with British organisational culture.


The UK Context: Why "Move Fast" Breaks

We operate in an environment defined by strong employment protections and unions that (rightly) wield actual power. Add a workforce that has endured enough "transformation initiatives" to spot corporate nonsense from a mile away, and you have a distinctive landscape. In this context, the mantra of "move fast and break things" isn't just culturally unacceptable; it’s a liability.

The Compliance and Talent Trap

Beyond the culture, there are structural hurdles. We are dealing with heavily regulated industries where AI strategy must thread the needle of strict compliance frameworks. Simultaneously, we face a geographic talent crunch: AI expertise is concentrated in specific hubs, making the competition for talent fierce. When you combine a sceptical, risk-averse workforce with these external pressures, top-down adoption isn't just ineffective; it is practically designed to fail.

We've written extensively about why AI rollouts fail in UK organisations and how fewer than 25% of UK firms turn adoption into results. The pattern is consistent: organisations that treat AI as purely a technology challenge miss the cultural and capability components entirely.

What CEOs Should Ask About Their AI Readiness

So, how do you know if you're falling into the trap? You need to move beyond technical due diligence and interrogate your cultural readiness.

The Diagnostic Questions That Matter

True readiness starts with co-creation: asking if the people whose jobs will change have been in the room designing that change from the beginning. It requires radical honesty regarding what this shift means for roles, redundancies, and career progression.

It also demands a hard look at your managerial capability. Do your leaders have the skills to discuss job security and AI without hiding behind corporate jargon? Do your teams have real support (time, training, and psychological safety), or are they expected to figure it out on top of their day jobs?

Finally, you must audit your metrics. Are you measuring actual behaviour change, or are you comforting yourself with vanity stats like "login frequency"?

The Reality Check

Be honest with your answers. If your internal response is "we'll handle the people side after the pilot," you haven't just delayed success; you've already lost.

The Fundamental Shift: Beyond the Software Rollout

Here is the mindset shift required. You must stop treating AI adoption like a technology rollout and start treating it as what it actually is: asking people to fundamentally change their professional identity, often while they are terrified about their future.

This isn't about being "nice" to employees. It is about protecting your investment.

"If your change metrics don't measure behaviour adoption, and only measure system access, you haven't implemented AI. You've simply purchased expensive software."

The organisations that succeed understand this distinction. They invest in manager enablement, create genuine pathways for capability building, and acknowledge that cultural shifts take deliberate effort.

Most importantly, they recognise that the people currently doing the work possess insights that no consultant roadmap can capture. The fastest path to AI success runs directly through the humans you are asking to change.

Ready to Break the Cycle of Failed Pilots?

If you are tired of initiatives that never scale and want to build genuine AI capability, the conversation must start with your people, not your technology stack.

At The Human Co., we specialise in AI change management that actually works for UK mid-market firms. We help you move beyond the excitement of the demo phase to the reality of sustained behaviour change and measurable business results.

The real competitive advantage isn't having the best AI tools; it's having a workforce that actually knows how to use them.

Contact Us to discuss how we can help you avoid the predictable failures and build an AI strategy that delivers on its promises.

7 Mistakes You're Making with AI Change Management (and How Middle Managers Can Fix Them)


Here's what everyone's getting wrong about AI transformation: they think it's a technology problem. The reality? 70% of AI implementations fail not because of the tech, but because of how organisations manage the human side of change.

I've spent the last two years working with mid-market firms across the UK, and the pattern's consistent. Companies are making the same seven critical mistakes with AI change management, mistakes that turn promising transformations into expensive failures. But here's the thing: middle managers are perfectly positioned to fix every single one of them.

Let me show you what's really happening.

Mistake 1: Using Middle Managers as Expensive Admin Assistants

What it looks like: Your middle managers spend their days updating spreadsheets, scheduling meetings, and processing routine approvals, tasks that could easily be automated or delegated.

Why it fails: During AI transformation, you need your middle managers focused on high-value activities: understanding customer needs, coaching teams through change, and spotting resistance before it derails projects. Instead, they're drowning in administrative tasks that add zero value to your AI adoption.

The fix: Audit what your middle managers actually do daily. Then systematically remove every administrative task that doesn't require human judgment. Redirect them toward strategic change leadership: helping teams understand how AI fits into their workflows, coaching employees through skill transitions, and translating executive AI strategy into practical team actions.

What this requires: A ruthless assessment of current responsibilities and the backbone to say "no" to administrative creep.

Mistake 2: Flattening Your Organisation Just When You Need Human Bridges

What it looks like: Following Silicon Valley's "unbossing" trend, you're eliminating middle management layers to create a flatter, more agile structure.

Why it fails: AI transformation creates massive uncertainty. Employees have questions, concerns, and resistance that senior leadership can't address directly. Without middle managers as translators and coaches, your AI rollout becomes a top-down mandate that meets bottom-up rebellion.

The fix: Keep your middle managers, but completely redefine their role. They're not task supervisors anymore: they're change orchestrators who help teams navigate AI integration. They translate strategic vision into practical reality and help employees adapt their skills and mindsets.

A Sheffield-based manufacturing firm learned this the hard way. After cutting their middle management by 40% to "move faster" with AI, they discovered their production teams couldn't effectively collaborate with new AI-driven quality control systems. Bringing back experienced team leaders as "AI integration coaches" turned their implementation around within three months.

Mistake 3: Assuming Managers Will Figure Out AI Training Themselves

What it looks like: You invest heavily in AI tools and executive education, but leave middle managers to work out their own training needs.

Why it fails: Your middle managers need two completely different skill sets: enhanced soft skills for managing anxious teams, plus enough AI literacy to understand what the technology can and can't do. Without both, they can't effectively guide their teams through transformation.

The data's stark: 58% of middle managers report feeling anxious about AI, yet most organisations provide zero structured training to help them become confident AI change leaders.

The fix: Create dual-track training programmes combining emotional intelligence development with practical AI literacy. Your middle managers need to understand prompt engineering, AI limitations, and ethical considerations, not to become technical experts but to make intelligent decisions about AI integration in their teams.

What this actually requires: Budget allocation for manager development (typically 2-3% of total AI investment) and accepting that training is ongoing, not a one-off workshop.

Mistake 4: Promoting the Wrong People to Manage AI Change

What it looks like: Your best individual contributors get promoted to management roles during AI transformation, regardless of their change leadership capabilities.

Why it fails: Managing AI change requires completely different skills from excelling at individual tasks. Technical expertise doesn't automatically translate to helping resistant employees embrace new ways of working.

The fix: Redesign your management selection criteria around change leadership capabilities. Look for people who can mentor, facilitate difficult conversations, and drive innovation, not just deliver results in their previous role.

Evaluate managers based on their ability to help teams adapt, not just hit traditional performance metrics. One Manchester-based financial services firm transformed their AI adoption by promoting managers specifically for their coaching abilities rather than their technical skills.

Mistake 5: Ignoring the Emotional Reality of AI Change

What it looks like: Your change communications focus on efficiency gains and competitive advantages while ignoring employee fears about job security and skill obsolescence.