Why AI Pilots Fail: The Timeline of a Stalled Rollout

We're doing it again.

Deloitte's CTO just told Fortune that what "really scares CEOs about AI" is the people challenge: moving from pilots to production at scale. Then he spent the entire interview talking about technology infrastructure.

I'm not dunking on Bill Briggs. He's clearly smart, and he's identifying the right problem. But the fact that even someone in his position defaults back to discussing data governance when addressing a people problem tells you everything about why 90% of AI implementations fail.

AI adoption in the workplace isn't a technology deployment: it's a behaviour change, and behaviour change is hard.

Why AI Implementation Consulting Misses the Mark

As specialists in AI change management for mid-market UK firms, we've seen these patterns play out first-hand across dozens of implementations. The approach is backwards every single time.

We ask the C-suite what they want AI to do. We ask Heads of Department how it fits their strategy. We run pilots with hand-picked enthusiasts who'd make anything work.

Know who we don't ask? The people whose jobs are about to fundamentally change.

The customer service rep who's about to have every call monitored and scored by AI. The analyst whose entire role is being automated. The middle manager whose team is being "right-sized" because AI can handle the workflow.

These aren't stakeholders to "bring along" after the decision's made. They're the people who determine whether your AI readiness actually translates to results.

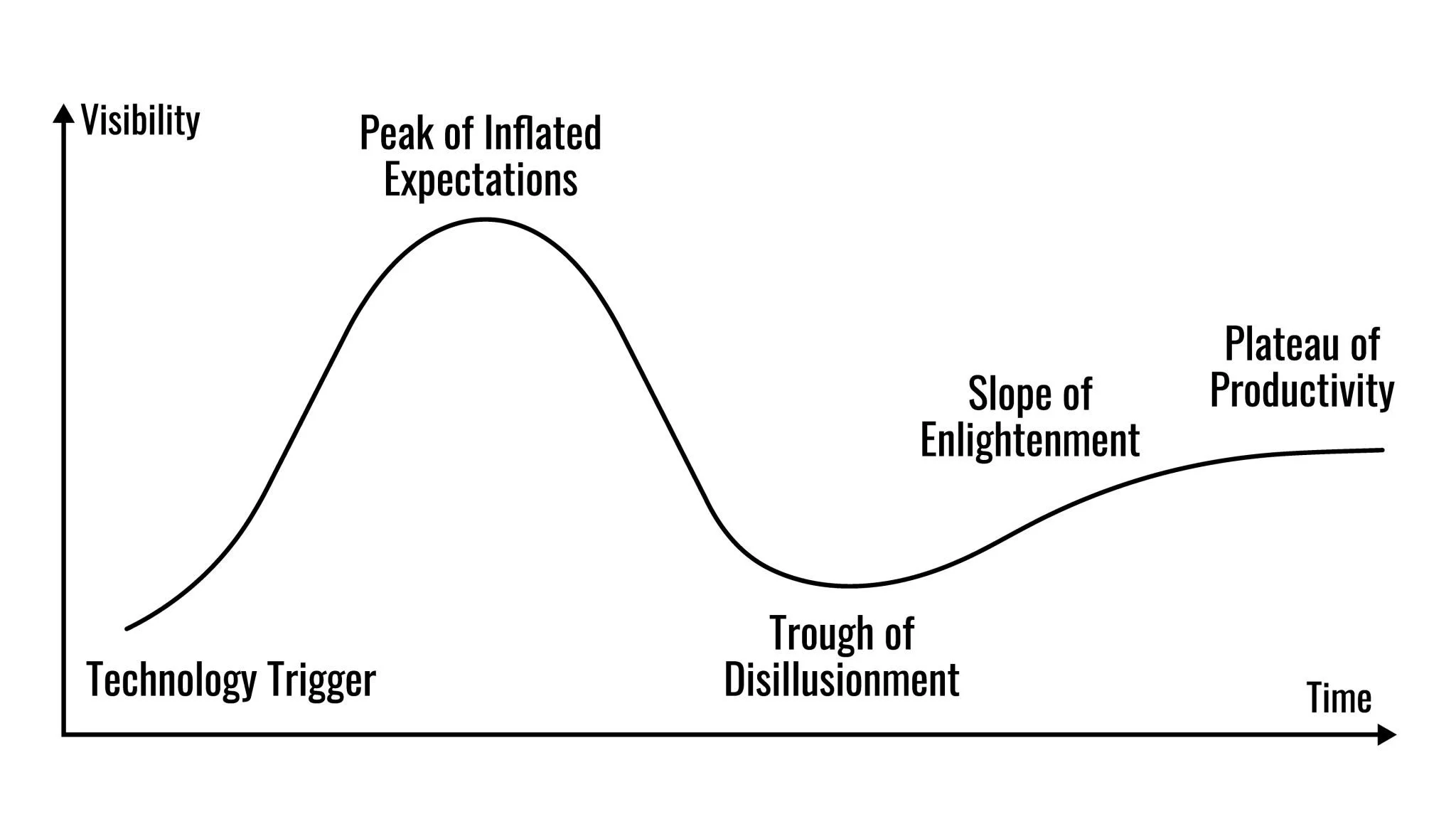

The Predictable Pattern of AI Adoption Failure

I've watched this cycle repeat across organisations of every size:

Phase 1: The Ivory Tower Design

It begins with the "Spark." Leadership gets excited about AI’s competitive advantage and hands the mandate down. IT teams build impressive demos that function flawlessly, but only within controlled environments, while consultants draft beautiful, theoretical roadmaps filled with phases, milestones, and optimistic success metrics.

Phase 2: The "Big Bang" Rollout

The organisation moves to execution with high-energy, company-wide communications. The tool is pushed out to the workforce with the expectation of immediate enthusiasm.

Phase 3: The "Silent Veto"

The reality on the ground kicks in. Front-line employees, who weren't consulted, comply just enough to avoid trouble but never truly integrate the tool into their workflow. Adoption hits a ceiling at 30%, and the promised productivity gains fail to materialise.

Phase 4: The Quiet Exit

Finally, the pilot is quietly shelved as leadership’s attention shifts to the next shiny initiative. The project is marked as a failure due to "change resistance," leaving the root causes unexamined.

But it's not resistance. It's a completely rational response to being told your job is changing without being part of designing what that change looks like.

What Real AI Capability Building Requires

Ultimately, this isn't just about making employees feel heard; it’s about protecting your investment. If you ignore the people component, you are essentially sabotaging your own data.

What Happens When You Ignore the Human Element

The symptoms of a tech-first approach are distinct and damaging. We see staff building elaborate workarounds to bypass the AI entirely, resulting in data quality so poor that the models become liabilities rather than assets. But the hidden cost is talent. Experienced employees, feeling unheard, often take their institutional knowledge and walk out the door, while those who remain become a demoralised workforce doing the bare minimum.

The Dividend of People-First Capability

Conversely, "doing the human work" pays a specific dividend: advocacy. When employees are involved early, they stop being obstacles and start providing the practical insights needed to fix implementation glitches in real time. This leads to faster adoption curves, sustained behaviour change, and, most importantly, tangible business outcomes that actually match the initial hype.

The Human Co. People-First Framework for AI Change Management

So, how do we operationalise that advocacy? It starts with The Human Co. People-First Framework.

Redefining the Problem Statement

Sustainable adoption doesn't start with technology; it starts with the humans currently doing the work, and it starts before the problem is even defined. We have to ask the uncomfortable questions early: What are they genuinely worried about? What do they know about the workflow that the roadmap ignores? And crucially, what parts of their job do they actually want AI to take off their plate?

Creating the Support System

Answering those questions is only half the battle; the rest requires a genuine support system. This means replacing "fit it in" with protected learning time, and replacing vague promises with concrete career paths.

It requires an environment of psychological safety, where staff can experiment and fail without consequence. And perhaps most importantly, it demands manager enablement, training leaders to have honest, difficult conversations about role evolution and job security, rather than just cheering from the sidelines.

The Reality

This is not "soft skills" window dressing. This is the difference between a theoretical success in a boardroom deck and a practical success on the front lines.

Why UK Firms Face Unique AI Cultural Shift Challenges

Applying this framework is vital anywhere, but in the UK market, it is non-negotiable. Why? Because the standard Silicon Valley playbook simply doesn't survive contact with British organisational culture.

The UK Context: Why "Move Fast" Breaks

We operate in an environment defined by strong employment protections and unions that (rightly) wield actual power. Combined with a workforce that has endured enough "transformation initiatives" to spot corporate nonsense from a mile away, you have a unique landscape. In this context, the mantra of "move fast and break things" isn't just culturally unacceptable; it’s a liability.

The Compliance and Talent Trap

Beyond the culture, there are structural hurdles. We are dealing with heavily regulated industries where AI strategy must thread the needle of strict compliance frameworks. Simultaneously, we face a geographic talent crunch; AI expertise is concentrated in specific hubs, making the competition for talent fierce. When you combine a sceptical, risk-averse workforce with these external pressures, top-down adoption isn't just ineffective; it is practically designed to fail.

We've written extensively about why AI rollouts fail in UK organisations and how fewer than 25% of UK firms turn adoption into results. The pattern is consistent: organisations that treat AI as purely a technology challenge miss the cultural and capability components entirely.

What CEOs Should Ask About Their AI Readiness

So, how do you know if you're falling into the trap? You need to move beyond technical due diligence and interrogate your cultural readiness.

The Diagnostic Questions That Matter

True readiness starts with co-creation: asking if the people whose jobs will change have been in the room designing that change from the beginning. It requires radical honesty regarding what this shift means for roles, redundancies, and career progression.

It also demands a hard look at your managerial capability. Do your leaders have the skills to discuss job security and AI without hiding behind corporate jargon? Do your teams have real support (time, training, and psychological safety), or are they expected to figure it out on top of their day jobs?

Finally, you must audit your metrics. Are you measuring actual behaviour change, or are you comforting yourself with vanity stats like "login frequency"?
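To make the difference between a vanity stat and a behaviour metric concrete, here is a minimal sketch in Python. It is an illustration rather than a prescribed method: the `UsageRecord` fields, the sample figures, and the 50% threshold are all hypothetical assumptions, and your own systems will record different signals.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    """One employee's activity over a reporting period (hypothetical fields)."""
    employee: str
    logins: int         # times the AI tool was opened
    tasks_total: int    # tasks completed by any method
    tasks_with_ai: int  # tasks where the AI output was actually used


def access_rate(records):
    """Vanity stat: share of employees who logged in at least once."""
    if not records:
        return 0.0
    return sum(1 for r in records if r.logins > 0) / len(records)


def behaviour_adoption_rate(records, threshold=0.5):
    """Share of employees who used AI on at least `threshold` of their tasks.

    The 0.5 default is an assumed cut-off, not an industry standard.
    """
    if not records:
        return 0.0
    adopters = sum(
        1
        for r in records
        if r.tasks_total > 0 and r.tasks_with_ai / r.tasks_total >= threshold
    )
    return adopters / len(records)


# Made-up sample data for illustration only.
records = [
    UsageRecord("A", logins=20, tasks_total=40, tasks_with_ai=30),
    UsageRecord("B", logins=15, tasks_total=50, tasks_with_ai=5),
    UsageRecord("C", logins=3, tasks_total=30, tasks_with_ai=0),
    UsageRecord("D", logins=0, tasks_total=25, tasks_with_ai=0),
]

print(f"Access rate: {access_rate(records):.0%}")                  # 75%
print(f"Behaviour adoption: {behaviour_adoption_rate(records):.0%}")  # 25%
```

In this made-up sample, three of four people log in, but only one has changed how they work. A dashboard that reports only the first number would hide exactly the gap described above.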

The Reality Check

Be honest with your answers. If your internal response is "we'll handle the people side after the pilot," you haven't just delayed success; you've already lost.

The Fundamental Shift: Beyond the Software Rollout

Here is the mindset shift required: you must stop treating AI adoption like a technology rollout and start treating it like what it actually is: asking people to fundamentally change their professional identity while they are often terrified about their future.

This isn't about being "nice" to employees. It is about protecting your investment.

"If your change metrics don't measure behaviour adoption, and only measure system access, you haven't implemented AI. You've simply purchased expensive software."

The organisations that succeed understand this distinction. They invest in manager enablement, create genuine pathways for capability building, and acknowledge that cultural shifts take deliberate effort.

Most importantly, they recognise that the people currently doing the work possess insights that no consultant roadmap can capture. The fastest path to AI success runs directly through the humans you are asking to change.

Ready to Break the Cycle of Failed Pilots?

If you are tired of initiatives that never scale and want to build genuine AI capability, the conversation must start with your people, not your technology stack.

At The Human Co., we specialise in AI change management that actually works for UK mid-market firms. We help you move beyond the excitement of the demo phase to the reality of sustained behaviour change and measurable business results.

The real competitive advantage isn't having the best AI tools; it's having a workforce that actually knows how to use them.

Contact us to discuss how we can help you avoid the predictable failures and build an AI strategy that delivers on its promises.