Why Microsoft Copilot Rollouts Stall at 20% Adoption: The Pattern Nobody Talks About

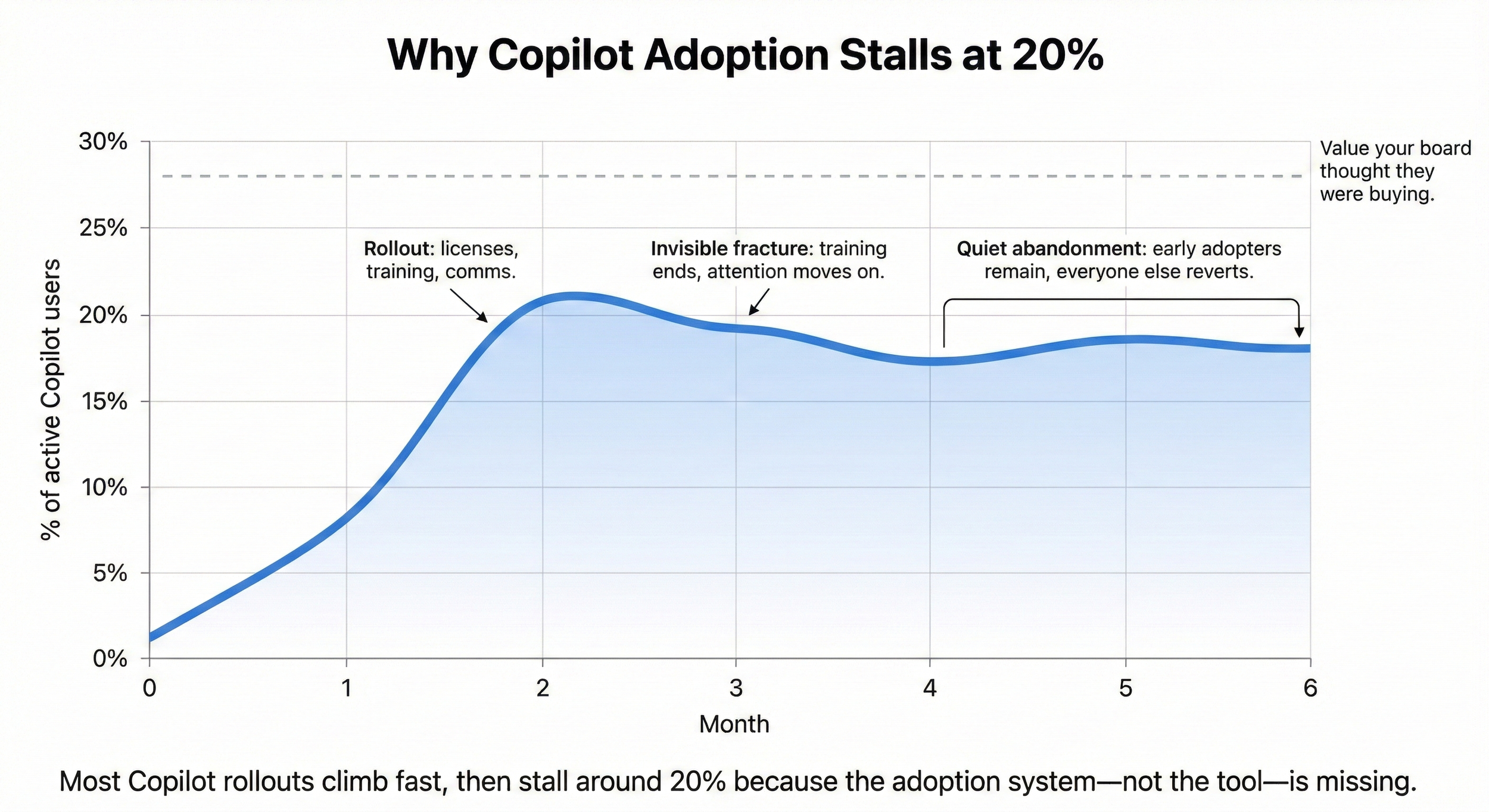

The pattern shows up consistently across Copilot rollouts: UK government pilots, enterprise case studies, Microsoft's own research. Initial enthusiasm. Usage climbs for 6-8 weeks. Then it stalls, typically between 15% and 25% adoption, often settling around 20%.

I've led digital transformation programs from inside mid-market organizations. I know what this looks like when you're the one responsible for making adoption work, when the consultants have left, the training's been delivered, and usage quietly flatlines. What's invisible in the case studies and pilot reports is why it happens. That's what I now diagnose: the organizational design gaps that training programs miss and deployment teams don't see until it's too late.

What's remarkable isn't that this happens. It's that it happens so predictably, and almost nobody sees it coming. Not the technology team who planned the rollout. Not the L&D team who designed the training. Not the executives who approved the budget.

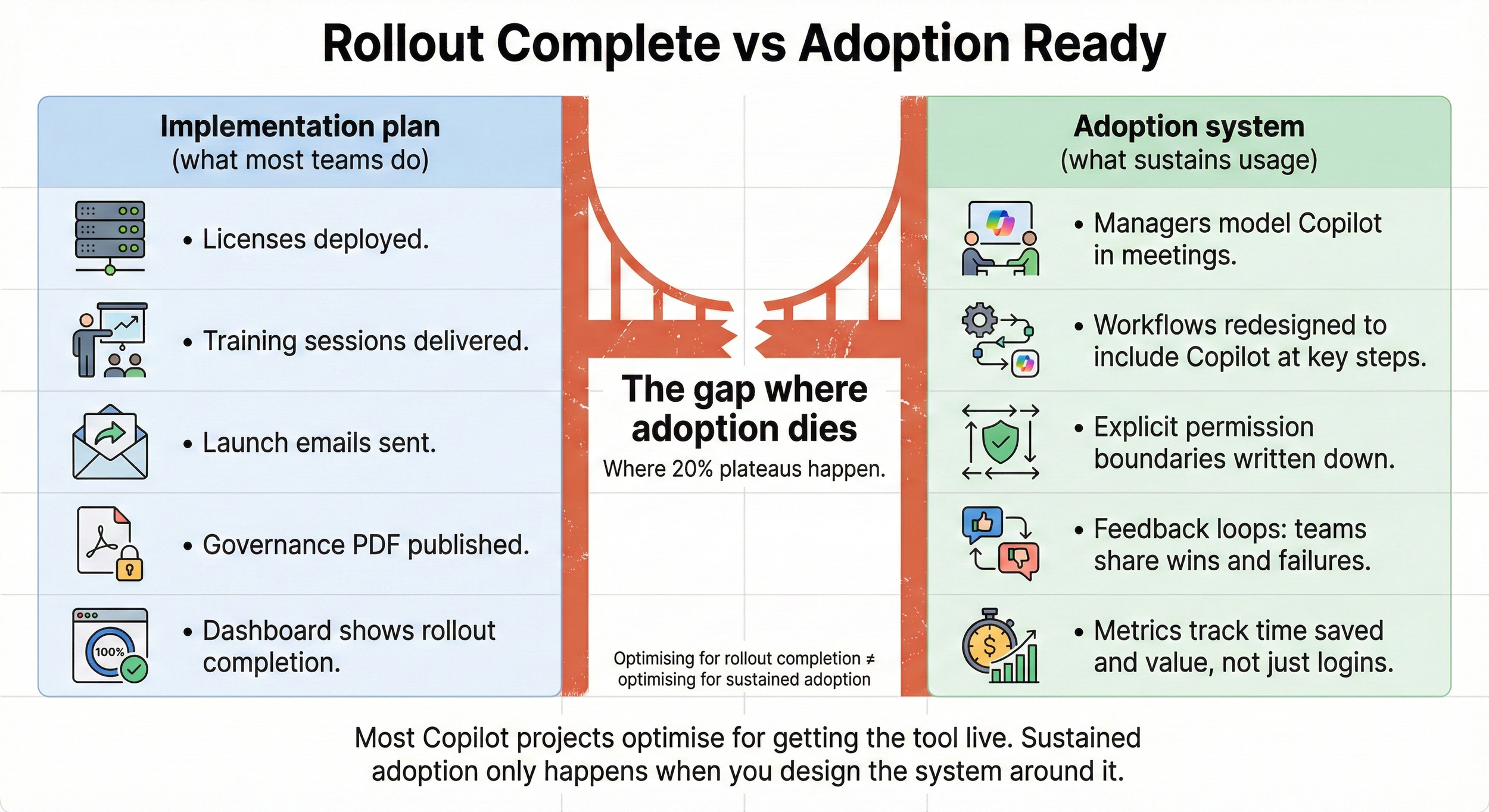

The problem isn't the product. Microsoft Copilot is technically sound. The problem is that organizations redesign the tool rollout without redesigning the adoption system around it. And that invisible gap, the one between 'we rolled out the tool' and 'people sustainably use the tool', is where your adoption dies.

The Real Cost Nobody Calculates

You're paying £25-35 per user per month for licenses that sit unused. For a 1,000-person firm at 20% adoption, that's 800 idle licenses: at least £240,000 a year at the £25 end of the range, and up to £336,000 at £35.

Microsoft's own research suggests Copilot users save 10-12 hours per month. At 80% non-adoption, that same 1,000-person firm is leaving 8,000-9,600 hours of productivity on the table every month. That's not a rounding error; it's real capacity you paid for but can't access.
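To run the same arithmetic for your own firm, here is a minimal Python sketch. It assumes every non-adopter holds a paid license and would save as much time as a current user, which is a simplification. The function names are illustrative, and the inputs are the figures quoted above (1,000 people, 20% adoption, £25-35 per license, 10-12 hours saved per user per month).

```python
def unused_license_cost(headcount, adoption_rate, price_per_user_month):
    """Annual spend on licenses held by people who are not using the tool."""
    unused_seats = round(headcount * (1 - adoption_rate))
    return unused_seats * price_per_user_month * 12


def unrealized_hours(headcount, adoption_rate, hours_saved_per_user_month):
    """Monthly hours not saved because non-adopters never see the benefit."""
    unused_seats = round(headcount * (1 - adoption_rate))
    return unused_seats * hours_saved_per_user_month


# Figures from the text: 1,000 people, 20% adoption.
low_cost = unused_license_cost(1000, 0.20, 25)   # £25 per user per month
high_cost = unused_license_cost(1000, 0.20, 35)  # £35 per user per month
low_hours = unrealized_hours(1000, 0.20, 10)     # 10 hours saved per month
high_hours = unrealized_hours(1000, 0.20, 12)    # 12 hours saved per month

print(f"Unused license spend: £{low_cost:,} to £{high_cost:,} per year")
print(f"Unrealized time savings: {low_hours:,} to {high_hours:,} hours per month")
```

Swap in your own headcount, measured adoption rate, and contract price. The point is the order of magnitude, not the precise figure.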

And then there's the political cost. You told the board this would transform productivity. Six months later, nobody's using it. Now every future technology decision gets the question: "Will this be like Copilot?"

What makes it harder: you can't just blame the tool. It works—your 20% prove that. You can't blame the people—they went to the training, they tried it, they had good reasons for stopping. You can't even blame the implementation team—they followed the playbook Microsoft provided.

The problem is the playbook itself. It optimizes for rollout completion, not sustained adoption. Those are different outcomes, and almost nobody designs for the second one.

What Happens After Rollout

The standard Copilot rollout looks like this:

Months 1-2: Initial deployment

IT sets up licenses and permissions. L&D delivers training sessions. Communications team sends announcement emails. Early adopters experiment. Usage metrics look promising.

Month 3: The invisible fracture

Training ends. Executive attention moves elsewhere. Users are "on their own." No feedback loop exists to tell them if they're using it well. No workflow redesign to make Copilot easier than the old way. Managers assume "it's working" because they received completion metrics, not usage metrics.

Months 4-6: The quiet abandonment

Users revert to familiar tools. Nobody notices because there's no adoption measurement system. The 20% who stick with it become isolated—seen as "the tech people." Leadership discovers the problem only when someone pulls usage data for a board report.

The pattern I see in organizations is that they design an implementation plan but not an adoption system.

An implementation plan answers: How do we roll this out?

An adoption system answers: How do we make sustained usage the path of least resistance?

They're not the same thing.

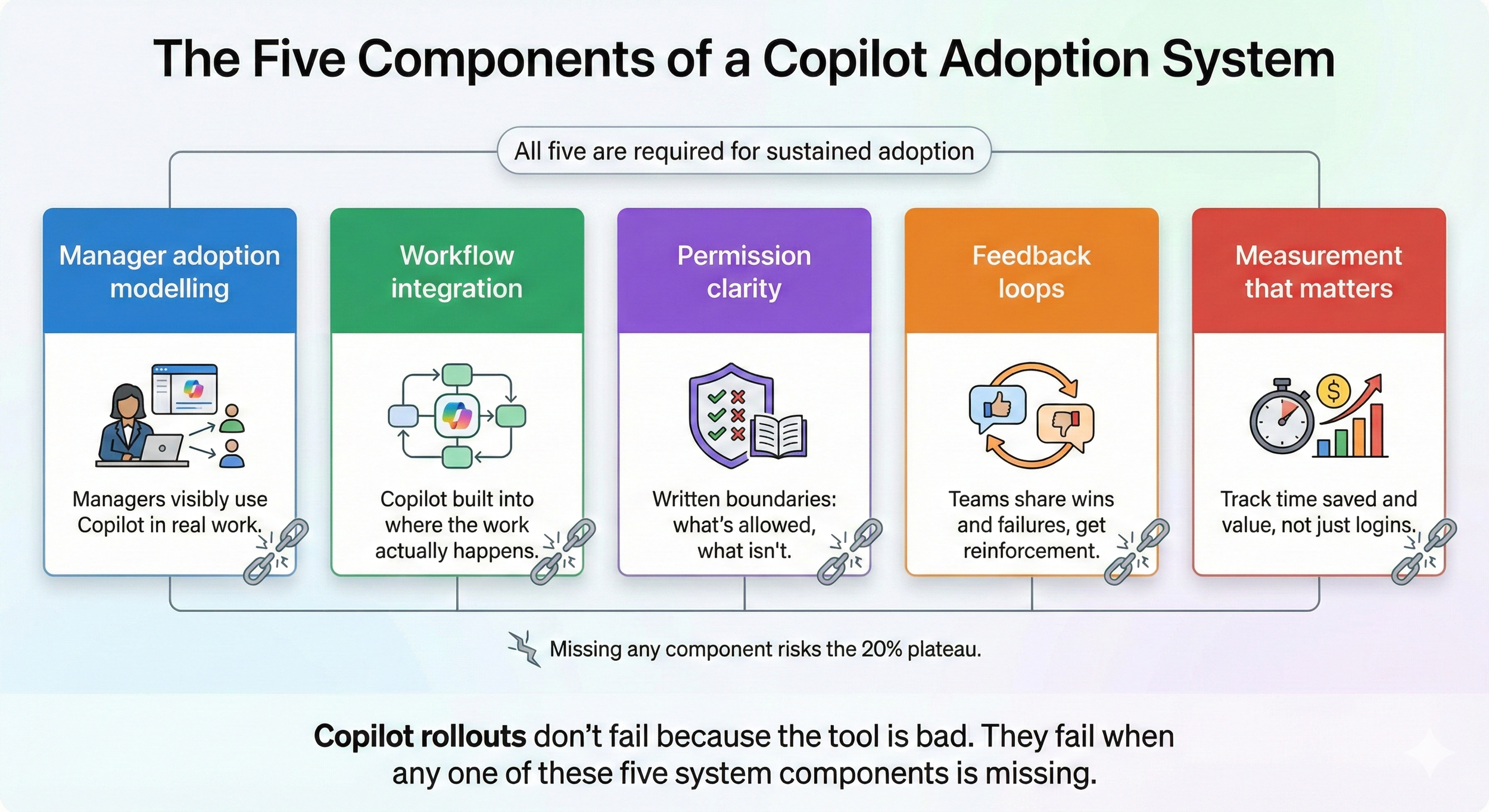

The Five Missing System Components

When I audit stalled Copilot rollouts, I'm looking for five things that should exist but almost never do. These aren't theoretical—I've led transformation programs where the absence of these components killed adoption, and I've seen what happens when they're built properly.

1. Manager adoption modeling

Employees don't adopt tools their managers don't visibly use. But nobody told managers that modeling usage was part of their job. They approved the rollout; they assumed that was enough. It wasn't.

This happens all the time in transformation programs. Managers will be positive initially—they'll attend a launch event, encourage their teams to go to training. But they don't show up to the training themselves. Lack of time is the usual reason given.

What I've observed repeatedly: the managers who don't show up are usually leading the least engaged teams in the organization. It's not a coincidence. Their absence sends a signal louder than any encouragement email.

When managers model usage—actually use the tool visibly in team meetings, share what they learned, show their failures—adoption follows. When they don't, their teams quietly opt out. No amount of training compensates for a manager who signals "this isn't important enough for my time."

2. Workflow integration

Copilot sits inside Microsoft 365 tools. But if your team's daily workflow doesn't naturally open those tools, Copilot never gets a chance. You've added a capability without redesigning the workflow to incorporate it.

I've seen this play out badly and well. The bad version: tools and processes designed purely for HR convenience with little insight into how the organization actually works. Performance management platforms are notorious for this—they get dumped on the organization, and nobody uses them without being berated by their HRBP.

The good version? I've seen it work at multi-site organizations, but it takes tremendous effort and research. You need a team of people working on workflow integration for 6-12 months for something big like a new HRIS. Not just rolling out the tool—actually redesigning how work happens to incorporate it.

For Copilot, this means mapping where your teams actually work. If they live in Salesforce, you need Copilot integrated there. If they live in project management tools, that's where it needs to be accessible. Don't make them leave their workflow to access productivity.

3. Permission clarity

You told people "use Copilot responsibly." They heard "don't make a mistake." In the absence of clear parameters, cautious employees opt out. They're not resisting; they're protecting themselves.

I've watched this pattern destroy adoption repeatedly. Leadership says "everyone is welcome to attend training" or "everyone should use this tool." Then a Head of Department undermines that message—"we need shift coverage" or "we're stretched"—but really they're protecting their budget or their team's time.

Employees hear mixed messages and default to the safest interpretation: don't use it. Especially in regulated environments or client-facing work, ambiguity kills experimentation.

What actually works: explicit boundaries written down. Not "use it responsibly" but "You can use Copilot for internal documents, drafting emails, research summaries. You cannot use it for client contracts until legal confirms." Clarity unlocks experimentation. Ambiguity kills it.

4. Feedback loops

Nobody tells users if they're doing it well. So they assume they're not. Early experiments feel clumsy. Without positive reinforcement, they stop experimenting.

In one digital literacy campaign I led at a 7,000-person organization, we surveyed people about how they liked to receive resources (typically delivered as classroom-style training). Thirty percent said Slack. We opened a Slack channel. In the first month, 1,000 users signed up.

Why? Because it created a feedback loop. People could see what their peers were doing, ask questions, share wins, and learn in real-time. It normalized experimentation. Someone would post "Used Copilot to draft meeting notes, saved 30 minutes" and three other people would try it that afternoon.

Without feedback loops, people experiment once, don't know if they did it "right," and stop. With feedback loops—whether it's a Slack channel, office hours, or weekly team check-ins—learning accelerates and adoption becomes self-sustaining.

5. Measurement that matters

You're tracking "usage"—did they open it? You're not tracking value delivery—did it save them time, improve output, reduce friction? So you don't know what's working.

This happens all the time in transformation programs. People want easy wins. A 36% engagement rate gets declared a success. Complaints get sidelined or excused—the person raising them is labeled pessimistic or difficult.

I've watched organizations celebrate "45% adoption" when the actual pattern was: 45% of people opened the tool once, tried something, decided it wasn't worth it, and never came back. That's not adoption—that's failed experimentation being rebranded as success.

The measurement shift that matters: stop counting logins. Start asking "Did this save you time? Improve your work? Reduce friction?" Track outcomes, not activity. Otherwise, you're measuring the wrong thing and missing the actual adoption failure.
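The gap between "opened it once" and "keeps using it" can be made visible even with plain usage logs, before any outcome survey exists. The sketch below is one possible way to do that, not a standard method. It assumes a hypothetical export of (user, date) open events, and the four-active-weeks threshold is an arbitrary illustration.

```python
from collections import defaultdict
from datetime import date


def adoption_breakdown(events, licensed_users, min_active_weeks=4):
    """Classify each licensed user as 'never', 'tried', or 'sustained'.

    events: (user_id, date) pairs, one per day a user opened the tool.
    min_active_weeks is an illustrative threshold, not an industry standard.
    """
    weeks_by_user = defaultdict(set)
    for user_id, day in events:
        iso_year, iso_week = day.isocalendar()[:2]
        weeks_by_user[user_id].add((iso_year, iso_week))

    breakdown = {"never": 0, "tried": 0, "sustained": 0}
    for user_id in licensed_users:
        active_weeks = len(weeks_by_user.get(user_id, ()))
        if active_weeks == 0:
            breakdown["never"] += 1
        elif active_weeks < min_active_weeks:
            breakdown["tried"] += 1
        else:
            breakdown["sustained"] += 1
    return breakdown


# Made-up sample data: five licensed users, one of whom keeps coming back.
licensed = ["a", "b", "c", "d", "e"]
events = [
    ("a", date(2025, 1, 6)), ("a", date(2025, 1, 13)),
    ("a", date(2025, 1, 20)), ("a", date(2025, 1, 27)),
    ("b", date(2025, 1, 7)),
    ("c", date(2025, 1, 7)), ("c", date(2025, 1, 8)),
]

result = adoption_breakdown(events, licensed)
ever_opened_rate = (result["tried"] + result["sustained"]) / len(licensed)
sustained_rate = result["sustained"] / len(licensed)
print(result)
print(f"Ever opened: {ever_opened_rate:.0%}, sustained: {sustained_rate:.0%}")
```

In this sample, the "ever opened" figure is 60% while sustained use is 20%. That is the same shape as the rebranded "45% adoption" described above. Returning users are still an activity measure, so this complements the outcome questions rather than replacing them.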

Why Your Leadership Team Missed This

Your leadership team optimized for the wrong success metric.

They measured: Rollout completion

✓ Licenses deployed

✓ Training delivered

✓ Communications sent

✓ Governance framework published

What they should have measured: Adoption system readiness

✗ Do managers understand their role in modeling usage?

✗ Have workflows been redesigned to incorporate Copilot?

✗ Can employees articulate clear permission boundaries?

✗ Does a feedback loop exist to reinforce good usage?

✗ Do we measure value delivery, not just tool access?

Nobody is incompetent here. This happens because adoption systems are invisible until they're not there.

Three assumptions that kill adoption

Assumption 1: "Training equals adoption"

Training teaches how to use the tool. It doesn't create the conditions where using it is easier than not using it. Those are different problems.

I've seen firms deliver exceptional training—clear, practical, well-attended. Adoption still stalled. Why? Because the training answered "how does this work?" but didn't solve "why is reverting to the old way still easier?"

Assumption 2: "If people need it, they'll use it"

Only if using it is frictionless. If reverting to the old way is easier—even slightly—they revert. Humans optimize for cognitive ease, not theoretical productivity.

This isn't laziness. It's how brains work under time pressure. When someone has 47 things on their task list, they default to the method that requires the least mental overhead. If Copilot adds friction (even one extra click, one moment of uncertainty about permissions, one unanswered question about whether they're doing it right), they revert.

Assumption 3: "Technology problems have technology solutions"

This isn't a technology problem. Copilot works. This is an organizational design problem. You've inserted a new capability into an unchanged system. The system rejected it.

I keep telling clients: you don't have an AI problem. You have an adoption system problem that's surfacing through AI. The same gaps would kill any tool rollout. Copilot just made them visible.

Why this is hard to see from inside

You're too close to it. When you're inside the organization:

You know the strategy (employees don't)

You know whether the training was optional or mandatory (each creates a different adoption psychology)

You know what leadership meant by 'use responsibly' (employees interpreted it differently)

You know the governance is actually permissive (employees experienced it as restrictive)

You know adoption is strategically important (employees think it's just another tool)

The gap between leadership's mental model and employees' lived experience is where adoption dies.

You need an external diagnostic perspective—not because you're not smart enough to figure it out, but because you can't see your own organizational blind spots. It's the same reason therapists have therapists and editors need editors. Proximity creates distortion.

What Needs to Shift

Based on leading transformation programs and analyzing stalled rollouts, this is what actually needs to happen. Not a checklist—a system redesign.

Reframe manager responsibility

Managers need to understand: adoption is now part of their job. Not "encourage your team to try it." Actively model it. Use Copilot visibly in team meetings. Share what you learned. Show failures. This isn't optional; it's leadership.

The shift: from "I approved this" to "I demonstrate this."

Redesign workflows, not just add capabilities

Map where your teams actually work. If they live in Salesforce, bring Copilot into Salesforce workflows. If they live in Slack, integrate there. Don't make them leave their workflow to access productivity.

I had one firm redesign their morning stand-up structure to include a 60-second "what did you use Copilot for yesterday?" rotation. Not to police usage—to normalize it and accelerate collective learning. Usage jumped 34% in six weeks. Not because people learned new features. Because they learned what their peers were doing and thought: "I could try that."

Articulate explicit permission boundaries

Write down exactly what's allowed. Not "use it responsibly"—that's meaningless. Say: "You can use Copilot for internal documents, drafting emails, research summaries. You cannot use it for client contracts until legal confirms." Clarity unlocks experimentation.

This sounds bureaucratic. It's actually the opposite. Ambiguity kills experimentation. Boundaries create safety.

Build feedback loops

Create spaces where people share what worked. Weekly team check-ins: "Who used Copilot this week? What did you learn?" Not to police usage, to normalize experimentation and accelerate learning. When people can see what their peers are doing and learn from both successes and failures, adoption accelerates organically.

Measure value, not activity

Stop counting "number of times Copilot was opened." Start asking: "Did this save you time? Improve your output? Reduce friction?" Track outcomes, not activity.

I worked with one firm that switched from measuring "daily active users" to measuring "tasks where Copilot demonstrably saved time." The numbers dropped initially (fewer people qualified under the new definition), but the quality of adoption skyrocketed. They stopped celebrating vanity metrics and started celebrating actual value delivery.
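Why the numbers drop under a value-based definition is easy to see on a small example. The sketch below is illustrative only. It assumes a hypothetical log where each record carries a self-reported `minutes_saved` field (negative when Copilot created extra work). How that firm actually captured "demonstrably saved time" is not specified here.

```python
def active_users(records):
    """Activity metric: anyone who logged any Copilot task at all."""
    return {r["user"] for r in records}


def value_users(records, min_minutes_saved=1):
    """Value metric: users with at least one task that reported a time saving."""
    return {r["user"] for r in records if r["minutes_saved"] >= min_minutes_saved}


# Made-up records; minutes_saved is a hypothetical self-reported field.
records = [
    {"user": "ana", "task": "meeting notes", "minutes_saved": 30},
    {"user": "ana", "task": "email draft", "minutes_saved": 15},
    {"user": "ben", "task": "email draft", "minutes_saved": 0},
    {"user": "cho", "task": "report summary", "minutes_saved": -10},
]

n_active = len(active_users(records))
n_value = len(value_users(records))
net_minutes = sum(r["minutes_saved"] for r in records)
print(f"Active users: {n_active}, users reporting a saving: {n_value}")
print(f"Net minutes saved: {net_minutes}")
```

Three people count as active, but only one reports a saving. The smaller number is the one that tells you where adoption is actually working.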

What This Isn't

This is not:

Retraining everyone. Training wasn't the problem. I've seen firms do three rounds of training. Usage still stalled. More training doesn't fix a system design gap.

Buying better technology. Copilot works. The product is sound. You don't need a different tool—you need a different adoption approach.

Waiting for people to 'get on board.' They're not resistant. They're navigating an unclear system. Resistance and confusion look similar from a distance but require completely different responses.

Change management grandstanding. Posters and town halls don't fix system design. I've seen firms spend tens of thousands on internal communications campaigns. Adoption moved 3%. Why? Because the underlying system blockers remained untouched.

This is system redesign. It requires diagnosis of what's actually broken, not assumptions about what 'should' work.

The Trade-Off Nobody Tells You

Fixing this takes executive attention and manager time. You'll need:

2-6 months of sustained focus

Manager enablement (not training—enablement in how to model, measure, and support adoption)

Workflow redesign work

Measurement system changes

That's real investment. Not as much as the initial rollout cost, but not trivial either.

The alternative is continuing to pay for unused licenses and explaining to the board why your transformation initiative failed.

Most organizations choose not to fix it. They declare victory at 20% and move on. That's a choice, but it's an expensive one.

I'm not here to tell you which choice is right. I'm here to tell you those are the actual choices, not the sanitized version where "a bit more effort" magically fixes it.

The Four Ways Organizations Try to Fix This (And Why They Fail)

I've watched firms attempt the same four responses. None of them work.

"More training will fix it"

They retrain everyone. Same content, different presenter. Usage stays flat. Why? Training wasn't the blocker. System design was.

I sat through one of these retraining sessions. The trainer was excellent. The content was clear. People paid attention, took notes, asked good questions. Three weeks later, usage was identical to pre-training levels.

When I interviewed participants afterwards, every single one said: "The training was interesting. But my actual work hasn't changed, my manager still doesn't use it, and I still don't know if I'm allowed to use it for work."

Training can't fix system problems.

"Mandate usage and measure compliance"

Leadership declares "everyone must use Copilot for X task." Compliance goes up. Value delivery stays low. Employees do the minimum required, then revert.

I watched one firm mandate that all meeting notes be written using Copilot. Compliance hit 78% within a week. But the notes were worse—generic, missing context, clearly copy-pasted from AI output without thought. People were gaming the metric, not adopting the tool.

Mandates produce compliance, not capability.

"Identify champions to evangelize"

They appoint "Copilot champions" to spread enthusiasm. Champions get excited. Nobody else cares. The gap between champions and everyone else widens.

I've seen this create resentment. Champions become "those people who won't shut up about Copilot." Normal employees tune them out. Worse, it signals to managers that adoption is someone else's job: the champions will handle it.

Champions are useful for answering questions. They're terrible at fixing system design problems.

"Wait for the next version to fix it"

They assume the next Microsoft update will solve it. It won't. The product isn't the problem.

I had one client postpone their adoption recovery work for four months waiting for a Copilot feature update. The update shipped. Adoption stayed flat. Why? Because the feature added capability, not clarity about workflows, permissions, or manager modeling.

Waiting for a product fix when you have a system problem is just expensive procrastination.

Why These All Fail

They treat adoption as a communication or motivation problem. It's not. It's a system design problem.

Your employees aren't resistant. They went to training. They tried it. They had good reasons for stopping:

Their manager doesn't use it (social proof missing)

Their workflow makes it harder to use than the old way (friction too high)

They don't know what's allowed (permission ambiguity)

Nobody confirmed they're doing it right (feedback loop missing)

They can't tell if it's saving time or creating work (measurement gap)

Those aren't motivation problems. Those are design problems.

And design problems require diagnosis, not enthusiasm.

When 20% Adoption Might Be Fine

Some will argue 20% adoption is actually success—that you only need power users, not universal adoption. They're not entirely wrong.

If your strategy is:

Concentrate Copilot in specific roles where ROI is highest (analysts, content creators)

Accept that other roles won't benefit meaningfully

Optimize for depth of usage, not breadth

Then 20% might be the right answer.

But that's rarely the strategy organizations articulated when they bought licenses for everyone. Most firms rolled out broadly, expecting broad adoption. When adoption stalls at 20%, it's not because they decided that was optimal—it's because the system broke and they don't know how to fix it.

The honest diagnostic question is: Did you design for 20%, or did you get stuck at 20%?

If you designed it, you're done.

If you got stuck, you've got a system problem to diagnose.

What to Do If You're Seeing This Pattern

If you're reading this and recognizing your own rollout, you're not alone. This pattern is predictable, not exceptional.

Your training wasn't bad. Your people aren't resistant. Your technology choice wasn't wrong.

What's missing is diagnosis.

You need to understand:

Which of the five system components is actually broken in your context

What your managers think their role is (versus what it needs to be)

Where your workflows create friction (versus where Copilot integrates naturally)

What permission ambiguity exists (that you can't see from inside)

What your current metrics hide (versus what they should reveal)

That's what a forensic adoption audit does. It diagnoses what's actually broken—not what 'should' be broken according to a playbook, but what's broken in your specific organizational context.

No obligation. Just diagnostic clarity.

Paul Thomas is a behavioral turnaround specialist focused on failed AI adoption in UK mid-market firms. After leading digital transformation programs as an HR leader, and watching technically sound rollouts stall for organizational reasons, he now diagnoses why GenAI implementations fail. His forensic approach examines governance gaps, workflow friction, and managerial adoption patterns that external consultants and training programs miss. He works with HR Directors, Finance leaders, and Transformation leads who need diagnostic clarity before committing to recovery. He also writes and publishes The Human Stack, a weekly newsletter with more than 5,000 readers on how to lead well in the era of GenAI.